AI And Web3 Security: Assessing The Risks Of Key Access For AI Models

The convergence of artificial intelligence (AI) and Web3 technologies presents exciting possibilities, but also significant security challenges. One crucial area demanding immediate attention is the risk associated with granting AI models access to cryptographic keys, the bedrock of Web3 security. This article delves into the potential vulnerabilities, explores mitigation strategies, and emphasizes the urgent need for robust security protocols in this rapidly evolving landscape.

The Allure and the Danger: AI's Role in Web3

AI is increasingly integrated into various Web3 applications, offering benefits like automated trading, improved smart contract auditing, and enhanced user experiences. However, this integration introduces a new attack surface. Many Web3 applications rely on private keys for authentication and transaction signing. If an AI model gains unauthorized access to these keys, the consequences can be catastrophic.

Key Risks of Granting AI Access to Cryptographic Keys:

- Compromised Keys: A malicious actor could manipulate or compromise the AI model itself and gain access to the keys it manages. This could lead to theft of digital assets, manipulation of smart contracts, and significant financial losses.

- Data Breaches: AI models often require access to substantial datasets for training and operation. If these datasets contain sensitive information such as private keys, a data breach could expose users to significant risks.

- Insider Threats: Even with robust security measures in place, insider threats remain possible. A compromised developer or employee with access to the AI model and its keys could exploit that access for malicious purposes.

- Algorithmic Vulnerabilities: The algorithms underpinning AI models can contain vulnerabilities that malicious actors could exploit to gain control of the model and, through it, the cryptographic keys it can reach. Guarding against this requires rigorous testing and auditing.
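One way to reduce the data breach risk listed above is to check datasets for key-like strings before a model is trained on them or given access to them. The following is a minimal sketch, not a complete secret scanner. It assumes that private keys appear as 64 hexadecimal characters (the usual text form of a 32-byte key), optionally prefixed with `0x`. The function name `find_key_like_strings` is hypothetical. Because transaction hashes have the same shape, this heuristic will produce false positives, so a match should be treated as a prompt for manual review.

```python
import re

# Assumption: a private key is written as exactly 64 hex characters,
# optionally prefixed with "0x". Longer hex runs are ignored.
KEY_PATTERN = re.compile(
    r"(?<![0-9a-fA-F])(?:0x)?[0-9a-fA-F]{64}(?![0-9a-fA-F])"
)


def find_key_like_strings(records):
    """Return (record_index, redacted_match) pairs for key-like strings.

    Matches are redacted so that the report itself does not leak the value.
    """
    findings = []
    for index, text in enumerate(records):
        for match in KEY_PATTERN.finditer(text):
            value = match.group(0)
            findings.append((index, value[:6] + "..." + value[-4:]))
    return findings


if __name__ == "__main__":
    sample = [
        "ordinary training text",
        "wallet export: 0x" + "ab" * 32,  # synthetic value, not a real key
    ]
    for index, redacted in find_key_like_strings(sample):
        print(f"record {index}: possible key {redacted}")
```

Records that are flagged can be quarantined or scrubbed before they reach the training pipeline.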

Mitigation Strategies: Protecting Web3 in the Age of AI

Addressing these risks necessitates a multi-pronged approach:

- Multi-Factor Authentication (MFA): Requiring MFA at every access point to AI systems that manage cryptographic keys adds an extra layer of security and makes unauthorized access significantly more difficult.

- Secure Enclaves and Hardware Security Modules (HSMs): Storing and processing keys within secure enclaves or HSMs minimizes the risk of exposure. These specialized hardware solutions offer a high degree of protection against software-based attacks.

- Zero-Knowledge Proofs (ZKPs): ZKPs enable verification of information without revealing the underlying data. This technology can be used to authenticate transactions and prove ownership of assets without exposing private keys to the AI model.

- Regular Security Audits: Independent security audits of AI models and associated systems are crucial for identifying and mitigating vulnerabilities before they can be exploited. Auditing should be a continuous process rather than a one-time event.

- Principle of Least Privilege: AI models should be granted only the minimum necessary access to cryptographic keys and other sensitive data. This limits the potential damage from a compromise.
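The least-privilege and key-isolation ideas above can be combined so that the AI model never holds a key at all. Instead, it submits requests to a separate signer that enforces a policy. The sketch below illustrates that pattern under stated assumptions: `TransferRequest`, `PolicySigner`, and `PolicyViolation` are hypothetical names, the policy is a simple recipient allowlist plus a per-request amount cap, and HMAC-SHA256 stands in for a real transaction signature purely to keep the example dependency-free. In a real deployment the signer would run in an HSM, enclave, or separate service rather than in the same process as the model.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TransferRequest:
    recipient: str
    amount: int  # expressed in the smallest unit of the asset


class PolicyViolation(Exception):
    """Raised when a request falls outside the configured policy."""


class PolicySigner:
    """Holds the key and signs only requests that pass the policy.

    Assumption: this class stands in for an HSM-backed signing service.
    """

    def __init__(self, secret_key: bytes,
                 allowed_recipients: Iterable[str], max_amount: int):
        self._secret_key = secret_key
        self._allowed = frozenset(allowed_recipients)
        self._max_amount = max_amount

    def sign(self, request: TransferRequest) -> str:
        if request.recipient not in self._allowed:
            raise PolicyViolation("recipient is not on the allowlist")
        if not 0 < request.amount <= self._max_amount:
            raise PolicyViolation("amount is outside the permitted range")
        payload = json.dumps(
            {"recipient": request.recipient, "amount": request.amount},
            sort_keys=True,
        ).encode("utf-8")
        # HMAC is a placeholder for a real signature scheme.
        return hmac.new(self._secret_key, payload, hashlib.sha256).hexdigest()


if __name__ == "__main__":
    signer = PolicySigner(b"demo-key-not-for-production",
                          {"0xTreasury"}, max_amount=100)
    # The model only constructs requests; it never sees the key.
    print(signer.sign(TransferRequest("0xTreasury", 25)))
    try:
        signer.sign(TransferRequest("0xUnknown", 25))
    except PolicyViolation as error:
        print("rejected:", error)
```

With this design, a compromised model can at most request transfers that the policy already permits, which bounds the damage without relying on the model behaving correctly.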

The Future of AI and Web3 Security: A Collaborative Effort

The security of AI and Web3 systems is not just a technological challenge; it's a collaborative effort demanding participation from developers, security researchers, and policymakers. Open-source security tools, standardized security practices, and continuous education are all essential components in building a more secure future for this rapidly evolving technological landscape. Ignoring these risks could have devastating consequences for the entire Web3 ecosystem. The future of decentralized applications hinges on proactively addressing these crucial security concerns.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on AI And Web3 Security: Assessing The Risks Of Key Access For AI Models. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Inter Vs Barcelona Analisis Previo Posibles Goleadores Y Cuotas De Apuestas

May 01, 2025

Inter Vs Barcelona Analisis Previo Posibles Goleadores Y Cuotas De Apuestas

May 01, 2025 -

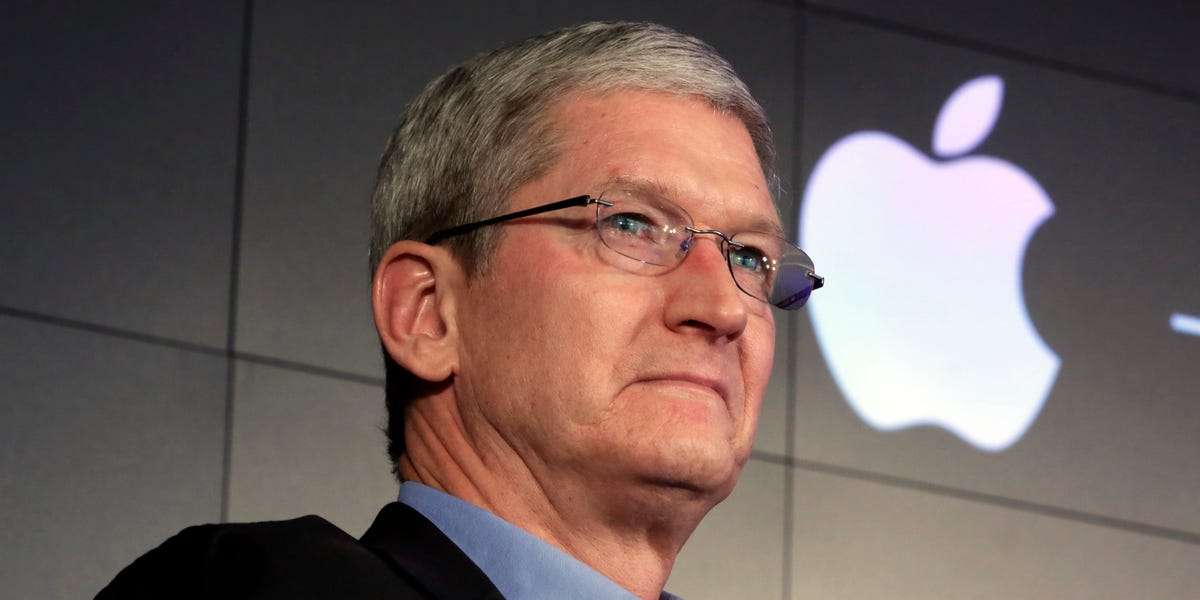

Apple Earnings Surprise Profit Beats Estimates But Service Revenue Slowdown Impacts Stock Price

May 01, 2025

Apple Earnings Surprise Profit Beats Estimates But Service Revenue Slowdown Impacts Stock Price

May 01, 2025 -

Power Outage Brings Early End To Fearnleys Madrid Open Bid

May 01, 2025

Power Outage Brings Early End To Fearnleys Madrid Open Bid

May 01, 2025 -

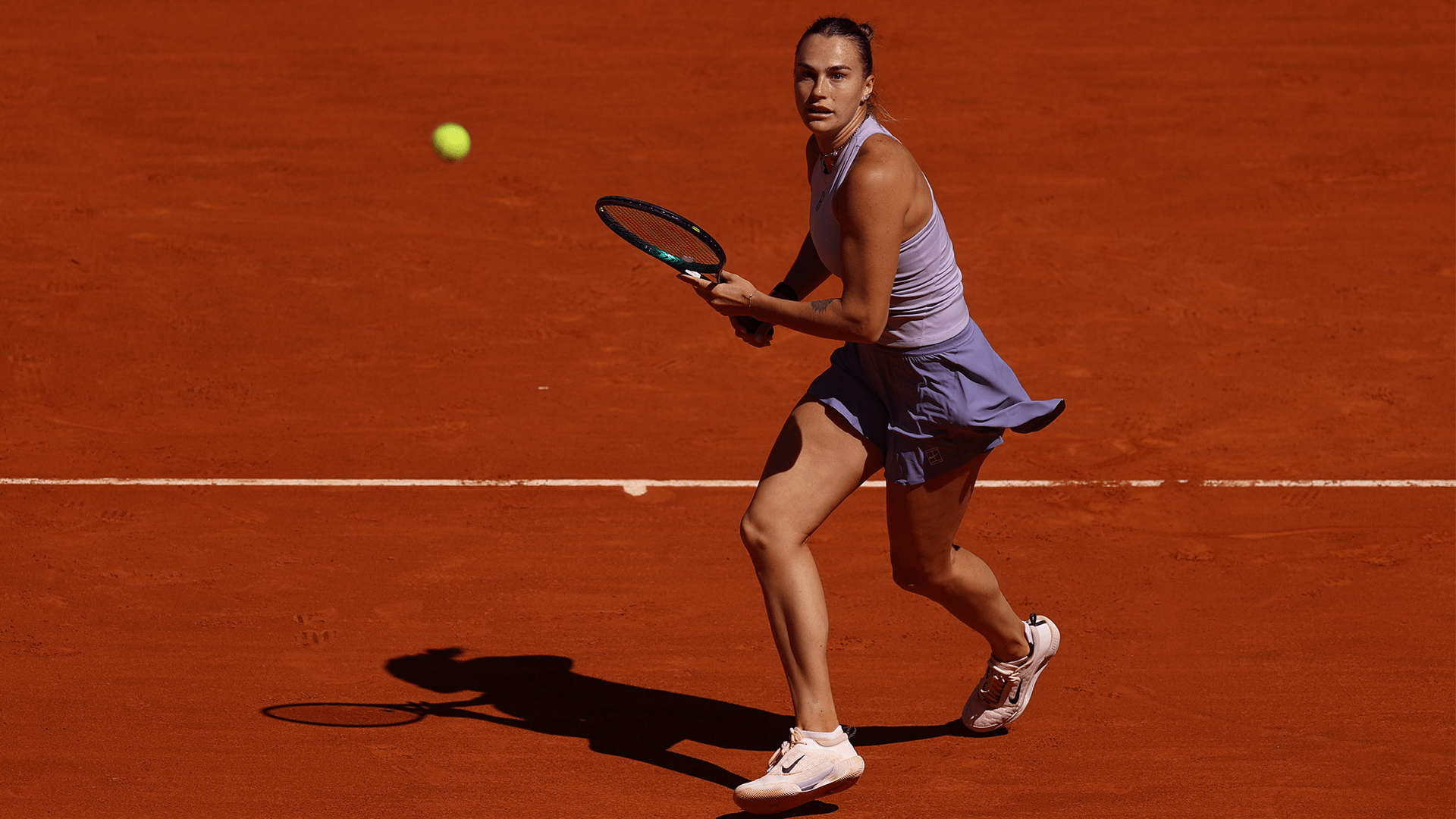

Watch Sabalenka Vs Stearns Madrid Open Match Details Betting Tips And More

May 01, 2025

Watch Sabalenka Vs Stearns Madrid Open Match Details Betting Tips And More

May 01, 2025 -

Nueva Iluminacion Spotify Camp Nou Caracteristicas Y Tecnologia

May 01, 2025

Nueva Iluminacion Spotify Camp Nou Caracteristicas Y Tecnologia

May 01, 2025