AI Superchip Showdown: Analyzing Cerebras WSE-3 And Nvidia B200 Capabilities

AI Superchip Showdown: Cerebras WSE-3 vs. Nvidia B200 – Which Reigns Supreme?

The world of artificial intelligence is experiencing a thrilling arms race, not of weaponry, but of processing power. At the forefront are colossal AI superchips, pushing the boundaries of what's possible in machine learning, deep learning, and large language models. This article delves into a head-to-head comparison of two titans in this arena: Cerebras' WSE-3 and Nvidia's B200, analyzing their capabilities and implications for the future of AI.

Cerebras WSE-3: The Colossus of Connectivity

Cerebras' WSE-3 is not a conventional chip; it is a wafer-scale processor built from an entire silicon wafer. With roughly 4 trillion transistors and 900,000 AI-optimized cores, it is far larger than any single GPU die. Its architecture links those cores through an on-wafer fabric, which keeps communication on silicon and reduces the latency that is a significant bottleneck in traditional multi-chip systems. This can allow faster training of very large AI models.

- Key Features:

- Massive scale: roughly 4 trillion transistors and 900,000 cores

- High bandwidth interconnect: Minimizes communication latency

- Unified memory space: Simplifies programming and improves efficiency

- Designed for large language models (LLMs) and generative AI: Optimized for tasks requiring immense processing power.

Nvidia B200: The Modular Powerhouse

Nvidia's B200 does not match the scale of the WSE-3 in a single unit, but it takes a modular approach. It is designed to be interconnected, scaling up processing power by combining multiple chips. This offers flexibility, allowing users to tailor a system to their specific computational needs. A single B200 has far fewer transistors than the WSE-3 (Nvidia cites 208 billion), but the modular design lets total capacity grow by adding more GPUs.

- Key Features:

- Modular design: Scalable processing power through interconnected chips

- High-bandwidth NVLink interconnect: Enables efficient communication between chips

- Strong software ecosystem: Leverages Nvidia's CUDA platform and extensive libraries

- Wide adoption in existing high-performance computing (HPC) infrastructure: Easier integration into existing systems.

The Showdown: Performance and Applications

Directly comparing the raw performance of the WSE-3 and B200 is challenging due to a lack of publicly available benchmarks on identical tasks. However, we can analyze their strengths and ideal use cases.

The WSE-3 excels in tasks demanding massive parallel processing and unified memory access, making it a strong contender for training extremely large language models and complex generative AI applications. Its monolithic design simplifies programming and minimizes communication overhead.

The B200's modularity offers flexibility and scalability, making it a strong option for a broader range of applications. Its compatibility with existing Nvidia infrastructure and its strong software ecosystem make it easier to integrate into HPC environments. As a result, it is versatile across AI tasks, from research to deployment.
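To make the communication trade-off between the two approaches concrete, here is a minimal sketch of a toy cost model. It assumes a ring all-reduce between devices and no overlap of compute and communication. The function names and every number in it are hypothetical, chosen only for illustration; none of them are measurements of the WSE-3 or the B200.

```python
def ring_allreduce_seconds(num_devices: int, payload_bytes: float,
                           link_bytes_per_s: float) -> float:
    """Bandwidth term of a ring all-reduce.

    Each device transfers 2 * (N - 1) / N of the payload over its link.
    Latency terms are ignored in this toy model.
    """
    if num_devices < 2:
        return 0.0  # a single device has nothing to exchange
    fraction = 2 * (num_devices - 1) / num_devices
    return fraction * payload_bytes / link_bytes_per_s


def scaling_efficiency(num_devices: int, compute_seconds: float,
                       payload_bytes: float, link_bytes_per_s: float) -> float:
    """Share of each step spent computing, assuming no compute/communication overlap."""
    comm = ring_allreduce_seconds(num_devices, payload_bytes, link_bytes_per_s)
    return compute_seconds / (compute_seconds + comm)


if __name__ == "__main__":
    # Hypothetical workload: 0.1 s of compute per step, 2 GB exchanged per step,
    # 100 GB/s per-device link. Illustrative values only.
    for n in (1, 8, 64):
        eff = scaling_efficiency(n, compute_seconds=0.1,
                                 payload_bytes=2e9, link_bytes_per_s=100e9)
        print(f"{n:>3} devices: {eff:.1%} of step time is compute")
```

In this model a single device spends all of its step time computing, while a multi-device system gives up a share of each step to communication. That share shrinks as link bandwidth rises, which is why high-bandwidth interconnects matter for modular designs.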

The Verdict: No Single Winner

Ultimately, declaring a single "winner" in this superchip showdown is premature and misleading. The optimal choice depends heavily on the specific application and computational requirements. The Cerebras WSE-3 represents a radical approach, focusing on sheer scale and unified memory for specific high-demand tasks. The Nvidia B200, with its modularity and broad ecosystem, offers flexibility and scalability for a wider range of AI applications. Both chips are groundbreaking advancements, driving the field of AI forward at an unprecedented pace. The future likely holds a landscape where both these architectures, and others yet to emerge, play crucial roles in shaping the next generation of AI.


Featured Posts

-

The Ultimate Robot Lawn Mower Test Injuries And Surprising Results

May 10, 2025

The Ultimate Robot Lawn Mower Test Injuries And Surprising Results

May 10, 2025 -

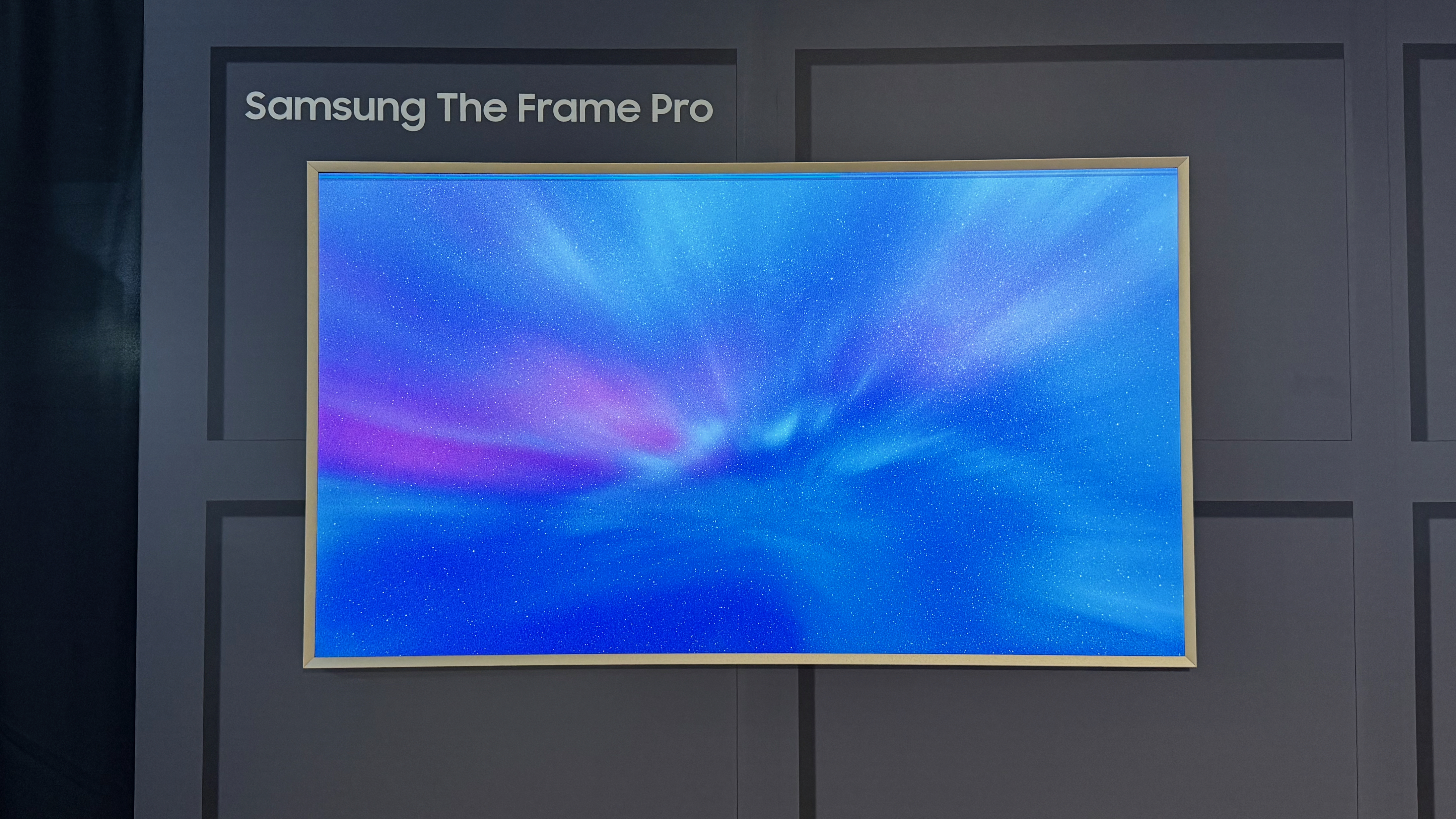

From The Frame To The Frame Pro A Detailed Comparison And Review

May 10, 2025

From The Frame To The Frame Pro A Detailed Comparison And Review

May 10, 2025 -

Amazons Sense Of Touch Robot Revolutionizing Warehouse Automation

May 10, 2025

Amazons Sense Of Touch Robot Revolutionizing Warehouse Automation

May 10, 2025 -

Warren Buffett Disminuye Su Participacion En Apple En Un 13 Analisis De La Decision

May 10, 2025

Warren Buffett Disminuye Su Participacion En Apple En Un 13 Analisis De La Decision

May 10, 2025 -

Gen Z Loyalty Will Androids Redesign Sway I Phone Users

May 10, 2025

Gen Z Loyalty Will Androids Redesign Sway I Phone Users

May 10, 2025

Latest Posts

-

Federal Hiring Freeze Leaves Oklahoma Lake Visitors High And Dry This Summer

May 11, 2025

Federal Hiring Freeze Leaves Oklahoma Lake Visitors High And Dry This Summer

May 11, 2025 -

Score Big Savings Mothers Day 2025 Restaurant Deals

May 11, 2025

Score Big Savings Mothers Day 2025 Restaurant Deals

May 11, 2025 -

Welfare Cuts Spark Major Backlash Against Keir Starmer

May 11, 2025

Welfare Cuts Spark Major Backlash Against Keir Starmer

May 11, 2025 -

Ending An Era Bill Gates Foundation Closure And Massive 20 Year Giving Plan Unveiled

May 11, 2025

Ending An Era Bill Gates Foundation Closure And Massive 20 Year Giving Plan Unveiled

May 11, 2025 -

Entendiendo A Xabi Explorando El Origen De Sus Deseos

May 11, 2025

Entendiendo A Xabi Explorando El Origen De Sus Deseos

May 11, 2025