AI Superchip Showdown: Cerebras WSE-3 Vs. Nvidia B200 Performance Benchmarks


The AI chip market is heating up, and two titans are vying for the top spot: Cerebras' WSE-3 and Nvidia's B200. Both pair novel architecture with massive processing power, and both target the training and serving of large language models (LLMs). But which chip reigns supreme? This article compares what is publicly known about their performance, strengths, and weaknesses to help you understand the implications for the future of AI.

Cerebras WSE-3: The Colossus of Connectivity

The Cerebras WSE-3 isn't just a chip; it's a wafer-scale engine with 4 trillion transistors. Keeping the whole processor on a single wafer lets its cores communicate on-chip, avoiding the interconnect bottlenecks often found in traditional multi-chip systems. This high-bandwidth on-chip interconnect is key to its performance on massive AI models.

- Key Features: Wafer-scale architecture, 900,000 AI-optimized cores, 44 GB of on-chip SRAM, ultra-high bandwidth interconnect.

- Strengths: Exceptional performance in large-scale model training, minimal data movement overhead.

- Weaknesses: High cost, limited availability, specialized software ecosystem.

Nvidia B200: The Scalable Powerhouse

Nvidia's B200, part of the Blackwell architecture, takes a different approach. While not wafer-scale, it uses interconnect technologies such as NVLink and NVSwitch to scale performance across multiple GPUs, making it a versatile option for a wide range of AI workloads.

- Key Features: Blackwell architecture, large HBM3e memory capacity, high interconnect bandwidth (via NVLink), extensive software support.

- Strengths: Scalability, broad software ecosystem, wider availability, established support network.

- Weaknesses: Potentially higher latency due to inter-GPU communication, increased complexity in managing large clusters.
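
To make the inter-GPU communication cost concrete, here is a minimal Python sketch that estimates the time of one ring all-reduce, a common pattern for synchronizing gradients across GPUs. The function name, its parameters, and the numbers in the usage lines are illustrative assumptions, not measured B200 or NVLink figures. Real communication libraries use more sophisticated algorithms and overlap communication with compute.

```python
def ring_allreduce_seconds(
    payload_bytes: float,
    num_gpus: int,
    link_bytes_per_s: float,
    step_latency_s: float = 0.0,
) -> float:
    """Rough time estimate for one ring all-reduce over `num_gpus` devices.

    A ring all-reduce runs 2 * (N - 1) steps (reduce-scatter, then all-gather),
    and each step moves payload / N bytes over every link in parallel.
    All inputs are hypothetical values supplied by the caller.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    if link_bytes_per_s <= 0:
        raise ValueError("link_bytes_per_s must be positive")
    if num_gpus == 1:
        return 0.0  # a single device has nothing to synchronize

    steps = 2 * (num_gpus - 1)
    bytes_per_step = payload_bytes / num_gpus
    return steps * (bytes_per_step / link_bytes_per_s + step_latency_s)


if __name__ == "__main__":
    # Placeholder numbers chosen only to show how the estimate scales.
    for n in (2, 8, 64):
        t = ring_allreduce_seconds(payload_bytes=8e9, num_gpus=n, link_bytes_per_s=1e11)
        print(f"{n:>3} GPUs: {t:.4f} s per all-reduce")
```

In this simple model the bandwidth term approaches twice the payload divided by the link bandwidth as the GPU count grows, while the latency term grows linearly with the number of steps. That is the kind of overhead a single-wafer design aims to avoid.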

Performance Benchmarks: A Head-to-Head Comparison

Direct comparisons are challenging because benchmark methodologies vary and many results are published by the manufacturers themselves. However, publicly available information points to some key differences:

- Large Language Model Training: Initial benchmarks suggest the Cerebras WSE-3 demonstrates superior performance in training extremely large language models, largely due to its reduced communication overhead. However, the Nvidia B200 can achieve comparable results by scaling across multiple GPUs, albeit with potentially higher energy consumption.

- Inference Performance: Both chips excel at inference (running trained models), but the Nvidia B200's broad software support and established ecosystem might give it an edge in deployment and ease of use for diverse applications.

- Energy Efficiency: Energy consumption is a crucial factor. While precise comparisons remain scarce, the massive scale of the Cerebras WSE-3 might present challenges in overall energy efficiency compared to a more modular approach like the Nvidia B200.
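
Because published results rarely share a methodology, one way to put your own measurements on a common footing is to normalize them to throughput and to work done per unit of energy. The Python sketch below shows the idea. The `BenchmarkRun` class, its field names, and the sample values are hypothetical placeholders, not results for either chip.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    """One measured run. All fields are supplied by whoever ran the benchmark."""
    system: str
    tokens_processed: float
    wall_seconds: float
    avg_power_watts: float

    @property
    def tokens_per_second(self) -> float:
        return self.tokens_processed / self.wall_seconds

    @property
    def tokens_per_joule(self) -> float:
        # Energy in joules = average power (W) * elapsed time (s).
        return self.tokens_processed / (self.wall_seconds * self.avg_power_watts)


def rank_by_efficiency(runs: list[BenchmarkRun]) -> list[BenchmarkRun]:
    """Return runs ordered from most to least tokens per joule."""
    return sorted(runs, key=lambda r: r.tokens_per_joule, reverse=True)


if __name__ == "__main__":
    # Placeholder values for illustration only; substitute real measurements.
    runs = [
        BenchmarkRun("system_a", tokens_processed=1_000_000, wall_seconds=100, avg_power_watts=20_000),
        BenchmarkRun("system_b", tokens_processed=1_000_000, wall_seconds=250, avg_power_watts=5_000),
    ]
    for run in rank_by_efficiency(runs):
        print(f"{run.system}: {run.tokens_per_second:.0f} tok/s, {run.tokens_per_joule:.3f} tok/J")
```

Comparing on both axes matters because a system can finish sooner yet spend more energy per token, which is exactly the trade-off between raw training speed and energy efficiency described above.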

The Verdict: It Depends on Your Needs

There's no single "winner" in this AI superchip showdown. The optimal choice depends heavily on specific needs and priorities:

- Choose Cerebras WSE-3 if: You require unparalleled performance in training exceptionally large language models and have the resources to handle its specialized ecosystem and high cost.

- Choose Nvidia B200 if: You need a highly scalable solution with broad software support, a readily available ecosystem, and prefer a more modular approach to deployment.

The ongoing evolution of AI hardware is exciting. Both Cerebras and Nvidia are pushing the boundaries of what's possible, and future benchmarks and real-world applications will further refine our understanding of their comparative strengths and weaknesses. This competition is ultimately beneficial for the entire AI community, driving innovation and accelerating progress in artificial intelligence.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on AI Superchip Showdown: Cerebras WSE-3 Vs. Nvidia B200 Performance Benchmarks. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Magento Supply Chain Flaw Exposes Hundreds Of Top E Commerce Sites To Attack

May 08, 2025

Magento Supply Chain Flaw Exposes Hundreds Of Top E Commerce Sites To Attack

May 08, 2025 -

Travel Alert Uk Foreign Office Updates Travel Warning For Favorite Holiday Spot

May 08, 2025

Travel Alert Uk Foreign Office Updates Travel Warning For Favorite Holiday Spot

May 08, 2025 -

Final Destination Bloodlines Directors Claim World Record For Oldest Person Set On Fire

May 08, 2025

Final Destination Bloodlines Directors Claim World Record For Oldest Person Set On Fire

May 08, 2025 -

Jaylin Williams His Journey From Arkansas To The Nbas Okc Thunder

May 08, 2025

Jaylin Williams His Journey From Arkansas To The Nbas Okc Thunder

May 08, 2025 -

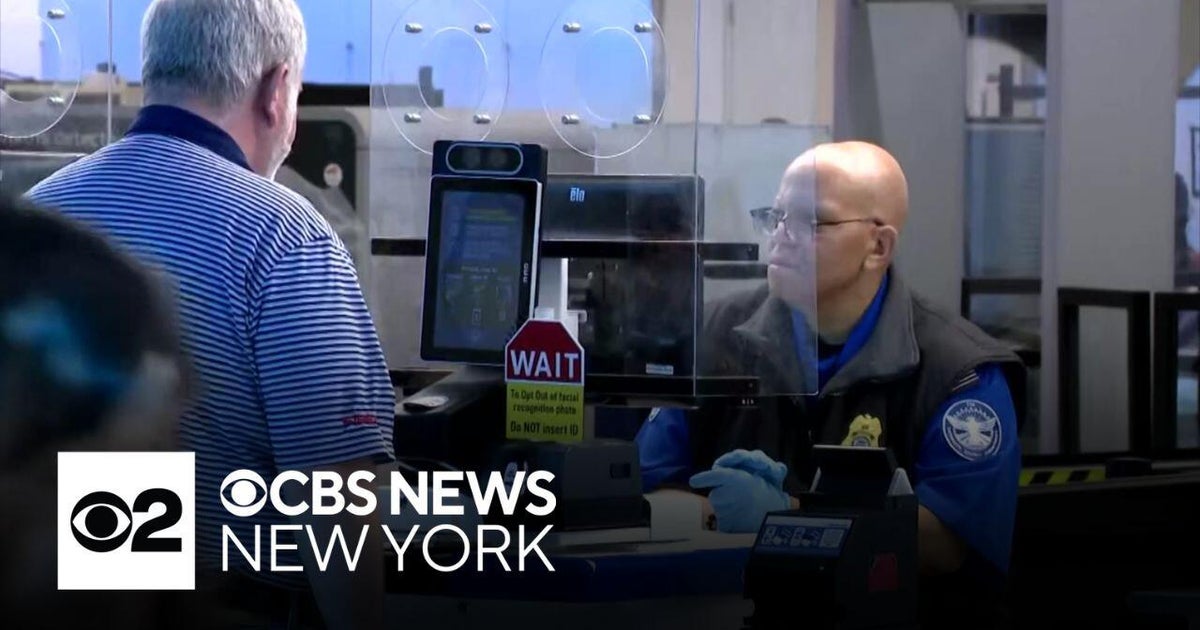

Tsa Extends Real Id Deadline Your Updated Guide To Air Travel

May 08, 2025

Tsa Extends Real Id Deadline Your Updated Guide To Air Travel

May 08, 2025

Latest Posts

-

Five Victims Of Revenge Killing In Tattle Aali History Of Conflict

May 08, 2025

Five Victims Of Revenge Killing In Tattle Aali History Of Conflict

May 08, 2025 -

Firebirds On The Road Analyzing The Teams Prospects For Success

May 08, 2025

Firebirds On The Road Analyzing The Teams Prospects For Success

May 08, 2025 -

Cost Of Living Payments Dwp Issues Definitive Decision

May 08, 2025

Cost Of Living Payments Dwp Issues Definitive Decision

May 08, 2025 -

Previsoes Economicas Copom Ipca Industria E O Desempenho Da China

May 08, 2025

Previsoes Economicas Copom Ipca Industria E O Desempenho Da China

May 08, 2025 -

Grand Theft Auto Vi Trailer Breakdown Exploring The Bonnie And Clyde Narrative

May 08, 2025

Grand Theft Auto Vi Trailer Breakdown Exploring The Bonnie And Clyde Narrative

May 08, 2025