AI's Evolving Threat: Experts Jon Twigge And Brian Wang On Future Survival Strategies

The rapid advancement of artificial intelligence (AI) is sparking both excitement and apprehension. While AI promises major gains across many sectors, concerns about its misuse and about existential threats are growing louder. AI experts Jon Twigge and Brian Wang recently shared their views on navigating this evolving landscape and on survival strategies for humanity. Their perspectives offer a framework for understanding and mitigating the risks of unchecked AI development.

The Growing Concerns: Beyond Technological Singularity

The conversation surrounding AI often centers on the hypothetical technological singularity – a point where AI surpasses human intelligence, potentially leading to unpredictable consequences. However, Twigge and Wang highlight more immediate concerns. They emphasize the potential for malicious actors to leverage AI for widespread harm, including:

- Autonomous Weapons Systems: The development of lethal autonomous weapons systems (LAWS) raises serious ethical and security concerns. Without meaningful human control, such systems could cause devastating unintended consequences.

- AI-Driven Disinformation Campaigns: The sophistication of AI allows for the creation of highly realistic deepfakes and the automated spread of misinformation, potentially destabilizing societies and undermining democratic processes.

- Economic Disruption: Rapid automation powered by AI could lead to widespread job displacement, exacerbating existing economic inequalities and social unrest.

- Algorithmic Bias and Discrimination: AI systems trained on biased data can perpetuate and amplify existing societal biases, leading to unfair or discriminatory outcomes in areas like criminal justice, loan applications, and hiring processes.

Survival Strategies: A Multi-faceted Approach

Both Twigge and Wang advocate for a multi-pronged approach to mitigate these risks. This strategy necessitates collaboration between governments, researchers, and the tech industry. Key elements include:

1. International Regulation and Cooperation: Wang emphasizes the crucial need for international agreements and regulations governing AI development and deployment. He argues that a coordinated global effort is essential to prevent a catastrophic arms race in AI weaponry and to establish ethical guidelines for AI research.

2. Investing in AI Safety Research: Twigge highlights the importance of dedicated research into AI safety and alignment. This includes developing techniques to ensure that AI systems remain aligned with human values and goals, preventing unintended consequences from emerging.

3. Promoting AI Literacy and Education: Both experts stress the importance of educating the public about AI's capabilities and limitations. Increased public understanding will help foster informed discussions about AI's societal impact and promote responsible innovation.

4. Fostering Transparency and Accountability: Transparency in AI algorithms and their decision-making processes is vital for identifying and mitigating biases. Accountability mechanisms are also needed to hold developers and deployers of AI systems responsible for their actions.

5. Ethical Frameworks for AI Development: The development of robust ethical frameworks is crucial to guide AI research and deployment. These frameworks should prioritize human well-being, fairness, and sustainability.

Looking Ahead: The Urgent Need for Action

The potential benefits of AI are immense, but so are the risks. Twigge and Wang's insights underscore the urgent need for proactive measures to ensure that AI development is guided by ethical principles and safeguards against potential harms. Ignoring these risks could have catastrophic consequences. The future of humanity may depend on our ability to navigate the challenges posed by AI responsibly and collaboratively. The time for decisive action is now.

Featured Posts

-

Understanding The Paps Punggol Grc Team And Their Priorities

Apr 28, 2025

Understanding The Paps Punggol Grc Team And Their Priorities

Apr 28, 2025 -

Venezia Match A Crucial Test For Milans Revamped System

Apr 28, 2025

Venezia Match A Crucial Test For Milans Revamped System

Apr 28, 2025 -

Solid State Power Bank Revolution Kuxius Premium Offering And Its Extended Battery Life

Apr 28, 2025

Solid State Power Bank Revolution Kuxius Premium Offering And Its Extended Battery Life

Apr 28, 2025 -

Spain And Portugal Grounded Widespread Blackout Causes Travel Havoc

Apr 28, 2025

Spain And Portugal Grounded Widespread Blackout Causes Travel Havoc

Apr 28, 2025 -

Preview Stevenage Vs Rotherham United Predicted Xi And Match Outcome

Apr 28, 2025

Preview Stevenage Vs Rotherham United Predicted Xi And Match Outcome

Apr 28, 2025

Latest Posts

-

Unrecognizable The Rocks Jaw Dropping Transformation For 40 M Project

Apr 29, 2025

Unrecognizable The Rocks Jaw Dropping Transformation For 40 M Project

Apr 29, 2025 -

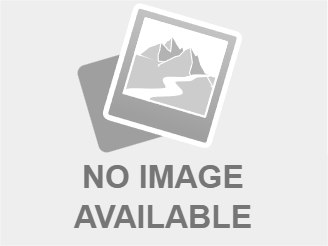

Tensions Rise Trump Confronts Bezos Over Amazon Tariff Strategy

Apr 29, 2025

Tensions Rise Trump Confronts Bezos Over Amazon Tariff Strategy

Apr 29, 2025 -

Urgent Evacuations Ordered Wildfire Races Southeast Of Tucson

Apr 29, 2025

Urgent Evacuations Ordered Wildfire Races Southeast Of Tucson

Apr 29, 2025 -

Huawei Launches Fastest Ai Chip Amidst Us Restrictions On Nvidia H20 Exports

Apr 29, 2025

Huawei Launches Fastest Ai Chip Amidst Us Restrictions On Nvidia H20 Exports

Apr 29, 2025 -

Arsenals Champions League Hope Hinges On Stopping Psg Star

Apr 29, 2025

Arsenals Champions League Hope Hinges On Stopping Psg Star

Apr 29, 2025