<h1>Apple's Dictation Software Glitch: iPhones Replacing "Trump" with "Racist"</h1>

Apple users have reported a bizarre glitch in the company's dictation software, where the word "Trump" is being automatically replaced with "racist." This unexpected behavior has sparked widespread discussion online, raising questions about potential biases in AI algorithms and the challenges of developing truly neutral language processing systems.

<h2>A Widespread Issue?</h2>

The issue, first reported on social media platforms such as X (formerly Twitter) and Reddit, appears to affect a significant number of iPhone users. Many users have shared screenshots and videos of the glitch, showing "Trump" being replaced with "racist" across different contexts. Apple has not yet officially acknowledged the problem, but the volume of reports suggests a reproducible software bug rather than isolated incidents. This is not simply predictive text suggesting an alternative word: the dictation software itself substitutes "racist" for "Trump," even when the user clearly says "Trump."

<h2>AI Bias: A Growing Concern</h2>

This incident highlights the growing concern about bias in artificial intelligence. Machine-learning models, such as the speech-recognition model behind Apple's dictation feature, are trained on massive datasets. If those datasets contain biases (for example, a disproportionate number of negative associations with a particular political figure), the resulting model may inherit and amplify them. The fact that the glitch specifically involves "Trump" could point to a bias in the data used to train Apple's dictation model, although this has not been confirmed. This is not the first time AI bias has surfaced. Similar incidents have been documented with other AI systems, which underscores the importance of carefully curating and auditing training data.

<h3>What Could Be Causing This?</h3>

Several theories are circulating regarding the root cause:

- Biased Training Data: The most likely culprit is skewed data used to train the AI. Negative sentiment surrounding Donald Trump may be overrepresented in the data Apple used.

- Software Bug: It's possible this is a simple coding error that inadvertently triggers this specific replacement.

- Accidental Trigger Word: A less likely scenario is that "Trump" inadvertently activates a specific filter or replacement rule intended for other purposes.

<h2>Apple's Response (or Lack Thereof)</h2>

As of this writing, Apple has not commented on the matter. Without an official statement, users are left frustrated and uncertain about when to expect a fix. The company's silence fuels speculation and underscores the need for transparency in how AI-powered features are developed and deployed. If the issue persists, it could cause significant reputational damage.

<h2>The Implications for AI Development</h2>

This incident serves as a cautionary tale for the AI development community. It underscores the critical need for rigorous testing, bias detection, and continuous monitoring of AI systems to ensure fairness and prevent unintended consequences. Developers must prioritize the ethical implications of AI and actively work to mitigate biases in their algorithms. The future of AI depends on addressing these challenges head-on. Ignoring such issues can lead to further erosion of public trust in AI technology.

<h2>What Users Can Do</h2>

Until Apple releases a fix, users can try the following workarounds:

- Type Instead of Dictate: The most straightforward solution is to avoid using dictation when referring to "Trump."

- Use Alternative Phrasing: Rephrase your sentences to avoid using the word "Trump" altogether.

- Wait for an Update: A software update from Apple is the most likely permanent fix for this glitch.

This unexpected glitch highlights the complex interplay between technology, politics, and bias. The ongoing situation demands a thorough investigation from Apple and a broader conversation about the ethical implications of AI development. We will continue to update this article as more information becomes available.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Apple's Dictation Software Glitch: IPhones Replacing "Trump" With "Racist". We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

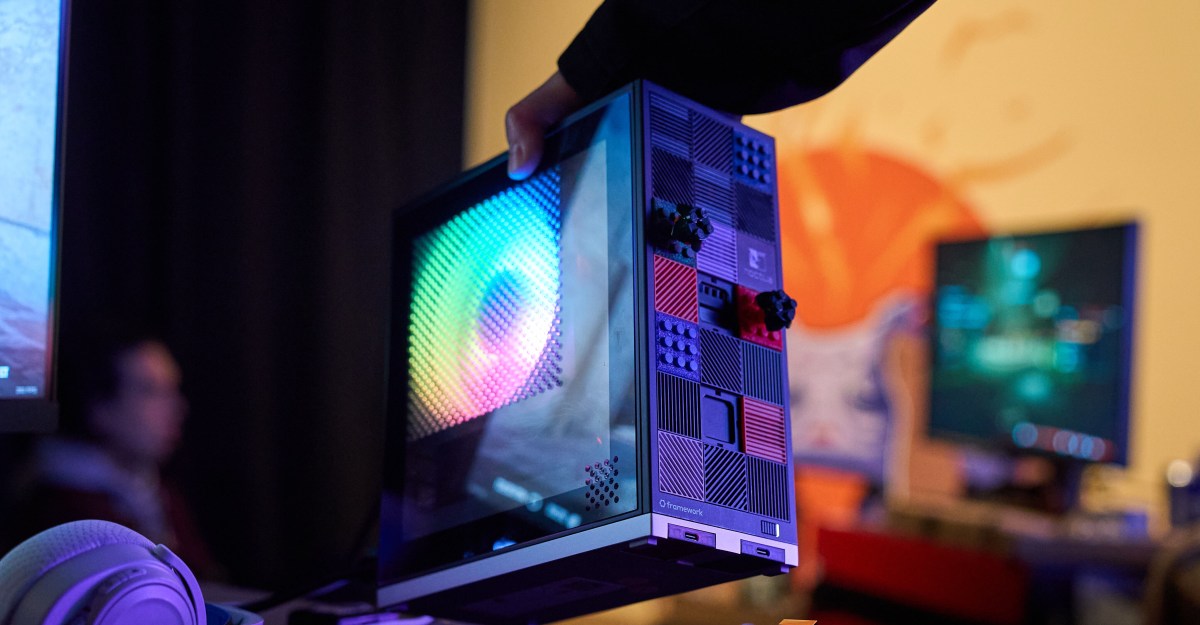

Framework Desktop A Deep Dive Into Its Customizable Gaming Potential

Feb 28, 2025

Framework Desktop A Deep Dive Into Its Customizable Gaming Potential

Feb 28, 2025 -

Collectors Item Alert Metal Mario Debuts In Hot Wheels Series

Feb 28, 2025

Collectors Item Alert Metal Mario Debuts In Hot Wheels Series

Feb 28, 2025 -

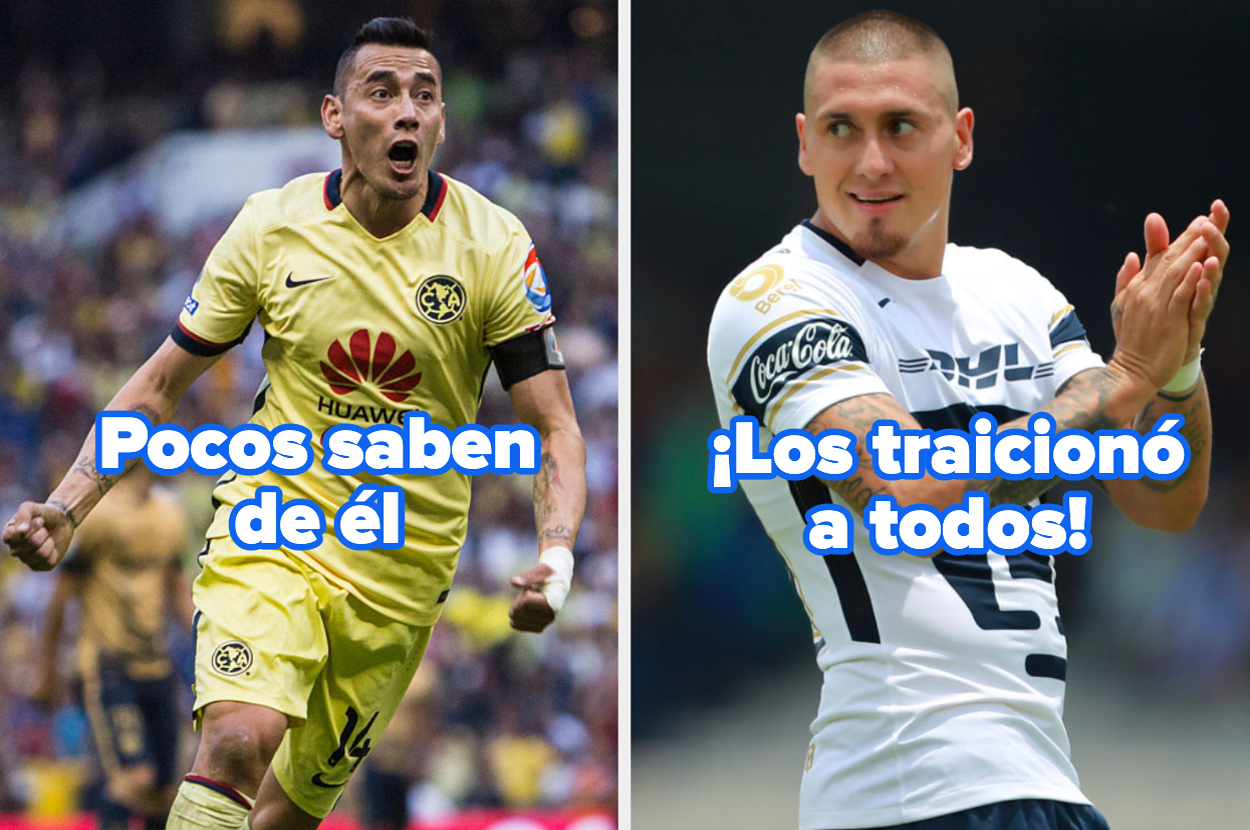

Futbolistas Que Jugaron En America Y Pumas Un Rompecabezas Para Expertos

Feb 28, 2025

Futbolistas Que Jugaron En America Y Pumas Un Rompecabezas Para Expertos

Feb 28, 2025 -

The Growing Influence Of Black Families On The Travel Industry

Feb 28, 2025

The Growing Influence Of Black Families On The Travel Industry

Feb 28, 2025 -

Secure Uk Betting Sites Find The Best Offers In October 2024

Feb 28, 2025

Secure Uk Betting Sites Find The Best Offers In October 2024

Feb 28, 2025