Apple's Voice Dictation Bug Fixed: No More "Trump" To "Racist" Substitutions


Apple users can breathe a sigh of relief. The frustrating and widely reported bug in Apple's voice dictation software, which inexplicably substituted the word "Trump" with "racist," has finally been fixed. This highly publicized glitch sparked widespread debate and concern, highlighting the potential pitfalls of AI-powered transcription tools and the importance of rigorous testing.

The issue, which surfaced in February 2025, affected various iOS and macOS devices. Users reported that, regardless of context, the dictation feature consistently replaced the proper noun "Trump" with the offensive term "racist." This wasn't a simple typographical error; it was a systemic problem that raised questions about potential biases embedded within Apple's algorithms. The bug went viral on social media and generated considerable negative press for the tech giant.

### Understanding the Root of the Problem

While Apple hasn't publicly detailed the exact cause of the bug, experts suggest several possibilities. These include:

- Data Bias in Training Datasets: AI models learn from massive datasets. If these datasets contain biased or skewed information, the model can inherit those biases, leading to unexpected and problematic outputs. The "Trump" substitution may have stemmed from a disproportionate association of the name with negative sentiment in a portion of the training data.

- Algorithmic Flaws: Even with unbiased data, flaws in the algorithm itself can cause unintended consequences. A poorly designed algorithm might misinterpret the phonetic similarity between words or prioritize certain patterns over others, leading to incorrect substitutions.

- Lack of Robust Testing: Thorough testing is crucial for any software release. The fact that this bug made it through Apple's quality assurance process highlights the need for more comprehensive and diverse testing methodologies.

### The Fix and Its Implications

Apple swiftly acknowledged the issue and released a software update addressing the problem. While the specific technical solution remains undisclosed, the update effectively resolves the unintended substitution. This quick response demonstrates Apple's commitment to addressing user concerns and maintaining the integrity of its products.

This incident serves as a crucial reminder about the limitations of AI and the importance of ethical considerations in AI development. It highlights the need for:

- Improved Bias Detection: Developers must implement robust systems for identifying and mitigating bias in training data.

- Enhanced Algorithmic Transparency: Greater transparency in how algorithms function can help identify and correct potential flaws.

- Rigorous Testing and Quality Assurance: Thorough testing across diverse datasets and user scenarios is critical to prevent such issues from impacting users.
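As an illustration of the kind of regression check the last point describes, here is a minimal Python sketch. It is not Apple's method, which remains undisclosed. The function name `find_substitutions`, the watchlist, and the sample transcripts are all hypothetical; the sketch assumes a test suite has a reference transcript and the dictation output available as plain text, and it ignores punctuation.

```python
from difflib import SequenceMatcher


def find_substitutions(reference, hypothesis, watchlist):
    """Return (expected, got) pairs where a watched reference word was replaced.

    Hypothetical helper: aligns the two transcripts word by word and reports
    any watched word that the dictation output swapped for something else.
    """
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    watched = {w.lower() for w in watchlist}

    matcher = SequenceMatcher(a=ref_words, b=hyp_words, autojunk=False)
    found = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "replace":
            continue  # only substitutions matter here, not insertions or deletions
        got = " ".join(hyp_words[j1:j2])
        for word in ref_words[i1:i2]:
            if word in watched:
                found.append((word, got))
    return found


# Made-up transcripts: one correct, one showing the reported substitution.
cases = [
    ("the senator met trump on monday", "the senator met trump on monday"),
    ("the senator met trump on monday", "the senator met racist on monday"),
]
for reference, hypothesis in cases:
    print(find_substitutions(reference, hypothesis, ["Trump"]))
```

A check like this only catches substitutions for names already on the watchlist, so it complements broader testing across diverse datasets rather than replacing it.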

The resolution of the "Trump" to "racist" substitution bug is not just a technical fix; it's a step toward greater responsibility and accountability in the development and deployment of AI-powered technologies. The incident underscores the need for ongoing vigilance in ensuring that these increasingly prevalent tools are fair and accurate. Apple's swift action offers a positive example of how tech companies can respond to and rectify significant software flaws, and the incident may influence future AI development practices across the industry.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Apple's Voice Dictation Bug Fixed: No More "Trump" To "Racist" Substitutions. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Arma Tu Outfit Ideal Y Encuentra Tu Alter Ego En My Hero Academia

Feb 28, 2025

Arma Tu Outfit Ideal Y Encuentra Tu Alter Ego En My Hero Academia

Feb 28, 2025 -

Alexa Plus Vs Echo Which Smart Speaker Should You Buy

Feb 28, 2025

Alexa Plus Vs Echo Which Smart Speaker Should You Buy

Feb 28, 2025 -

The Transformative Impact Of Complete Martian Mapping On Planetary Science

Feb 28, 2025

The Transformative Impact Of Complete Martian Mapping On Planetary Science

Feb 28, 2025 -

Metal Mario Joins The Hot Wheels Lineup This Summer

Feb 28, 2025

Metal Mario Joins The Hot Wheels Lineup This Summer

Feb 28, 2025 -

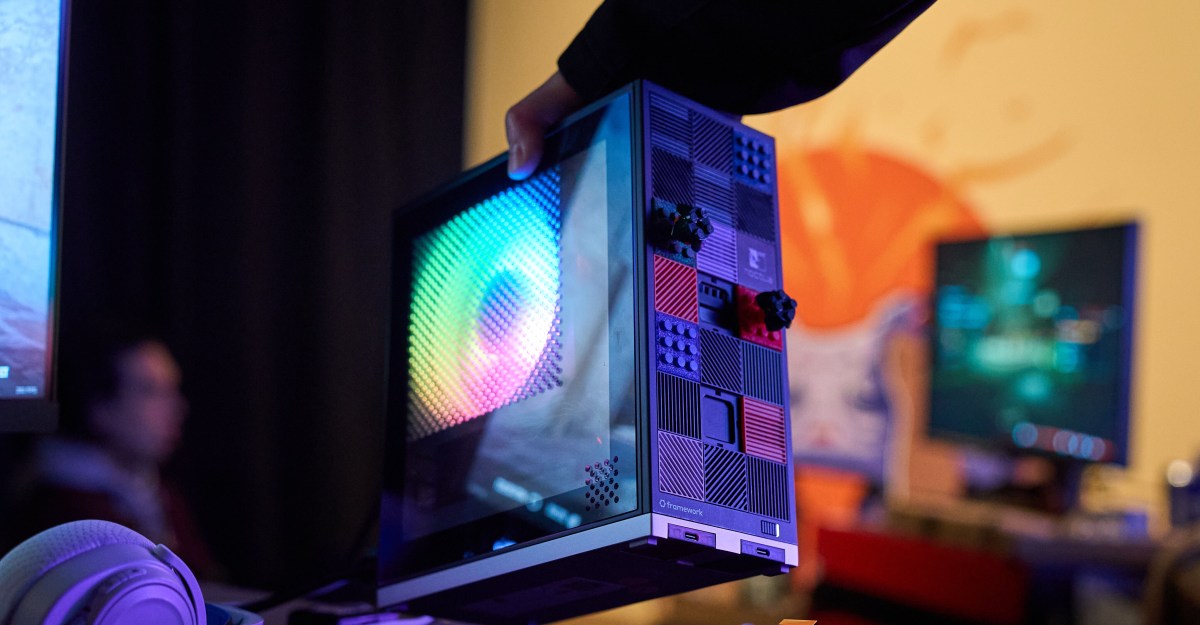

Building A Gaming Pc With Framework A Hands On Assessment Of Its Desktop

Feb 28, 2025

Building A Gaming Pc With Framework A Hands On Assessment Of Its Desktop

Feb 28, 2025