Are AI Chatbots Being Used to Facilitate Crimes? The Emerging Threat

The rapid advancement of artificial intelligence (AI) has brought remarkable innovations, but it also presents new challenges. One growing concern is the misuse of AI chatbots to facilitate crimes. The same accessibility that makes these tools so useful also puts them within reach of malicious actors. This article examines the emerging threat of AI chatbots being used for criminal activities, the methods involved, and the potential consequences.

The Dark Side of Conversational AI:

AI chatbots, designed for helpful interactions, are increasingly sophisticated, and their ability to mimic human conversation also makes them effective tools for criminals. Malicious users can exploit them in several ways:

- Scamming and Phishing: AI chatbots can be programmed to impersonate individuals or organizations, producing highly convincing phishing messages. Because these bots can tailor their responses to each victim, they increase the likelihood of success and can slip past traditional anti-phishing defenses that rely on spotting generic wording or poor grammar.
- Spreading Misinformation and Disinformation: The ability to generate large volumes of text quickly makes AI chatbots well suited to spreading false narratives and propaganda. These can be used to manipulate public opinion, sow discord, and even influence elections. The speed and scale at which this can happen are alarming.
- Cyberstalking and Harassment: AI chatbots can be used to automate harassment campaigns, sending abusive messages en masse or crafting personalized attacks. When these tools are operated through anonymous accounts, tracing perpetrators and holding them accountable becomes difficult.
- Facilitating Illegal Transactions: Dark web marketplaces can use AI chatbots to streamline illegal transactions, offering automated customer service while preserving anonymity. This can facilitate the sale of illicit goods and services, including drugs, weapons, and stolen data.
- Generating Fraudulent Content: From fake reviews to convincing fake news articles, AI chatbots can be used to manipulate online platforms and shape public perception. Such content can damage reputations, manipulate stock prices, or even incite violence.

Identifying and Combating the Threat:

Addressing the criminal use of AI chatbots requires a multi-pronged approach:

- Improved AI Detection Technologies: Developing algorithms that can identify AI-generated content is crucial. This involves building systems that analyze linguistic patterns, stylistic choices, and contextual inconsistencies to flag potentially malicious AI interactions.
- Enhanced Cybersecurity Measures: Strengthening online security is paramount. This includes adopting more robust authentication protocols, such as multi-factor authentication, and developing more sophisticated anti-phishing techniques. Educating users to spot AI-driven scams is also essential.
- Collaboration Between Law Enforcement and Tech Companies: Close cooperation between law enforcement agencies and AI developers is needed to share information, track malicious activity, and develop effective countermeasures.
- Ethical AI Development and Deployment: Promoting ethical AI development practices, including transparency and accountability, is essential. AI developers must anticipate potential misuse and build in safeguards against malicious applications.

The Future of AI and Crime:

The use of AI chatbots in criminal activities is still an emerging area. As AI technology becomes more sophisticated, the potential for misuse is likely to grow. Staying informed about these threats and supporting the development of effective countermeasures is vital for protecting individuals and society as a whole. The future of AI depends on our collective ability to harness its power for good while mitigating its risks.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Are AI Chatbots Being Used To Facilitate Crimes?. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Louise Redknapp On Moving On Love And Reconciliation After Jamie Split And Band Feud

May 25, 2025

Louise Redknapp On Moving On Love And Reconciliation After Jamie Split And Band Feud

May 25, 2025 -

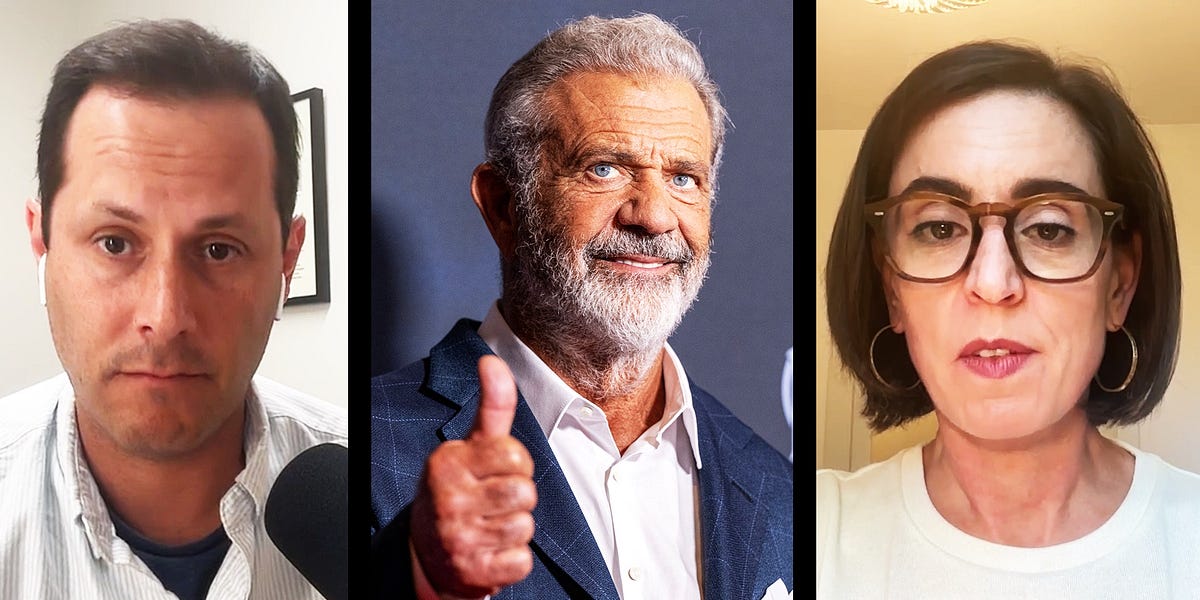

White House Insider Why Trump Fired Her Over Mel Gibsons Guns

May 25, 2025

White House Insider Why Trump Fired Her Over Mel Gibsons Guns

May 25, 2025 -

From 28 Days Later To 28 Years Later Evolution Of The Zombie Apocalypse Film

May 25, 2025

From 28 Days Later To 28 Years Later Evolution Of The Zombie Apocalypse Film

May 25, 2025 -

Auckland Fc Vs Melbourne Victory Team News Lineups And Expert Match Prediction

May 25, 2025

Auckland Fc Vs Melbourne Victory Team News Lineups And Expert Match Prediction

May 25, 2025 -

Planning Your Trip To Birmingham Pride 2025 Check These Road Closures And Transport Updates

May 25, 2025

Planning Your Trip To Birmingham Pride 2025 Check These Road Closures And Transport Updates

May 25, 2025

Latest Posts

-

Putins Russia What A New Stalin Statue Reveals

May 25, 2025

Putins Russia What A New Stalin Statue Reveals

May 25, 2025 -

The Current State Of Bitcoin And Ethereum Supply Shock Analysis

May 25, 2025

The Current State Of Bitcoin And Ethereum Supply Shock Analysis

May 25, 2025 -

Sheffield United Game Leaves Sunderland Player Fighting For Breath Needing Oxygen

May 25, 2025

Sheffield United Game Leaves Sunderland Player Fighting For Breath Needing Oxygen

May 25, 2025 -

How People Are Exploiting Ai Chatbots For Illegal Activities

May 25, 2025

How People Are Exploiting Ai Chatbots For Illegal Activities

May 25, 2025 -

Fujifilm X H2 First Impressions Fun Intuitive And Engaging

May 25, 2025

Fujifilm X H2 First Impressions Fun Intuitive And Engaging

May 25, 2025