Are AI Models a Security Threat to Web3? Exploring the Risks

The decentralized dream of Web3, built on blockchain technology and promises of transparency and security, faces a new, sophisticated threat: Artificial Intelligence (AI). While AI offers numerous potential benefits for Web3, its inherent vulnerabilities and potential for malicious use pose significant security risks. This article explores these emerging threats and examines how the Web3 community is striving to mitigate them.

The Allure and the Threat of AI in Web3

AI's potential applications in Web3 are vast, ranging from improved smart contract auditing and fraud detection to enhanced user experience through personalized services. However, this very power can be weaponized. The decentralized nature of Web3, while intended to enhance security, also presents unique challenges when it comes to defending against AI-driven attacks.

Key Security Risks Posed by AI:

- Sophisticated Phishing and Social Engineering: AI can be used to create highly convincing phishing scams that exploit human psychology to trick users into revealing their private keys or seed phrases. These attacks can be personalized and highly targeted, making them very difficult to detect.

- Automated Attacks on Smart Contracts: AI can automate the discovery and exploitation of vulnerabilities in smart contracts. This allows attackers to launch coordinated attacks at scale, significantly increasing the risk of financial losses and data breaches. This is especially dangerous because blockchain transactions are often irreversible.

- Sybil Attacks Amplified: AI can be used to create vast networks of fake accounts, amplifying the impact of Sybil attacks on decentralized systems. Such networks can manipulate voting processes, distort market prices, and undermine the integrity of Web3 platforms.

- Deepfakes and Identity Theft: AI-powered deepfake technology can create realistic fake videos and audio recordings, making it easier for malicious actors to impersonate individuals and gain unauthorized access to accounts or funds.

- AI-powered Malware and Viruses: AI-powered malware and viruses tailored to exploit Web3 vulnerabilities pose a serious threat. These attacks can be difficult to detect and remove, potentially leading to significant damage.
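To make the Sybil risk concrete, here is a minimal sketch of how fake accounts can flip a vote. It assumes a hypothetical governance poll where every account gets exactly one vote; the function names and the vote counts are illustrative and do not describe any real platform.

```python
def sybil_accounts_needed(honest_yes: int, honest_no: int) -> int:
    """Fake 'yes' accounts needed for 'yes' to strictly win.

    Assumes a hypothetical one-account-one-vote poll with no
    identity checks, so each fake account counts as a full vote.
    """
    deficit = honest_no - honest_yes
    return max(0, deficit + 1)


def tally(honest_yes: int, honest_no: int, sybil_yes: int) -> str:
    """Return the winning side once fake 'yes' votes are added."""
    yes_total = honest_yes + sybil_yes
    return "yes" if yes_total > honest_no else "no"


# Illustrative numbers only: 400 honest 'yes' votes against 600 honest 'no' votes.
needed = sybil_accounts_needed(400, 600)
outcome = tally(400, 600, needed)
```

In this toy setting the attacker's cost grows only with the size of the honest margin, which is why cheap, automated account creation matters so much for systems that count accounts rather than verified identities or stake.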

Mitigation Strategies and Future Considerations:

The Web3 community is actively working on solutions to address these risks. Key strategies include:

- Enhanced Smart Contract Security Audits: More rigorous auditing processes, possibly incorporating AI-powered tools for vulnerability detection, are crucial.

- Improved User Education: Educating users about the risks of AI-powered attacks is paramount to preventing successful phishing scams and social engineering attempts.

- Development of AI-powered Security Tools: Developing AI tools to detect and prevent AI-driven attacks is a key area of research and development. This includes creating robust anti-phishing and anti-malware solutions specifically designed for Web3.

- Blockchain-based Identity Verification: Implementing strong identity verification systems can mitigate the risks associated with deepfakes and Sybil attacks.

- Collaboration and Open Source Security: Collaboration among developers, researchers, and security experts is vital to sharing knowledge and developing effective countermeasures.
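As a rough illustration of what a very simple anti-phishing check might look like, the sketch below flags domains that closely resemble a trusted one. It is not an AI-based detector and not a description of any existing tool: the trusted domains, the function names, and the 0.85 similarity threshold are all assumptions chosen for the example, and the comparison uses Python's standard `difflib.SequenceMatcher`.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list; a real tool would maintain its own.
TRUSTED_DOMAINS = ["example-wallet.com", "example-dex.org"]


def closest_trusted(domain: str) -> tuple[float, str]:
    """Return (similarity ratio, trusted domain) for the best match."""
    domain = domain.lower()
    scored = [
        (SequenceMatcher(None, domain, trusted).ratio(), trusted)
        for trusted in TRUSTED_DOMAINS
    ]
    return max(scored)


def is_lookalike(domain: str, threshold: float = 0.85) -> bool:
    """Flag domains that are near, but not equal to, a trusted domain.

    The threshold is an assumed value for illustration, not a tuned one.
    """
    if domain.lower() in TRUSTED_DOMAINS:
        return False
    ratio, _ = closest_trusted(domain)
    return ratio >= threshold


suspicious = is_lookalike("examp1e-wallet.com")  # one character swapped
```

A string-similarity check like this catches only the crudest impersonation attempts; it is shown here as a starting point that a wallet or browser extension could combine with the user education and AI-based detection described above.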

Conclusion:

The integration of AI into Web3 is a double-edged sword: AI offers substantial benefits, but it also introduces significant security challenges. The Web3 ecosystem must address these risks proactively through improved security protocols, user education, and new defense mechanisms. Only through a combined effort can the decentralized web realize its promise of security and transparency as AI advances and stay ahead of the evolving threats it brings.

Featured Posts

-

Revolutionizing Payments Ethena Uses Ton For Dollar Transactions Within Telegram

May 03, 2025

Revolutionizing Payments Ethena Uses Ton For Dollar Transactions Within Telegram

May 03, 2025 -

The Return Of Trumpian Politics A Pre Election Day Analysis

May 03, 2025

The Return Of Trumpian Politics A Pre Election Day Analysis

May 03, 2025 -

Met Office Predicts Thundery Showers For First Half Of May

May 03, 2025

Met Office Predicts Thundery Showers For First Half Of May

May 03, 2025 -

People Are Real The Impact Of Authenticity On Social Media Engagement

May 03, 2025

People Are Real The Impact Of Authenticity On Social Media Engagement

May 03, 2025 -

Champion Lopez Edges Out Barboza In Thrilling Times Square Bout

May 03, 2025

Champion Lopez Edges Out Barboza In Thrilling Times Square Bout

May 03, 2025

Latest Posts

-

Why The 2018 Comedy Sequel Falls Flat

May 03, 2025

Why The 2018 Comedy Sequel Falls Flat

May 03, 2025 -

Link11s Rebranding Combining Three Brands For Enhanced User Experience

May 03, 2025

Link11s Rebranding Combining Three Brands For Enhanced User Experience

May 03, 2025 -

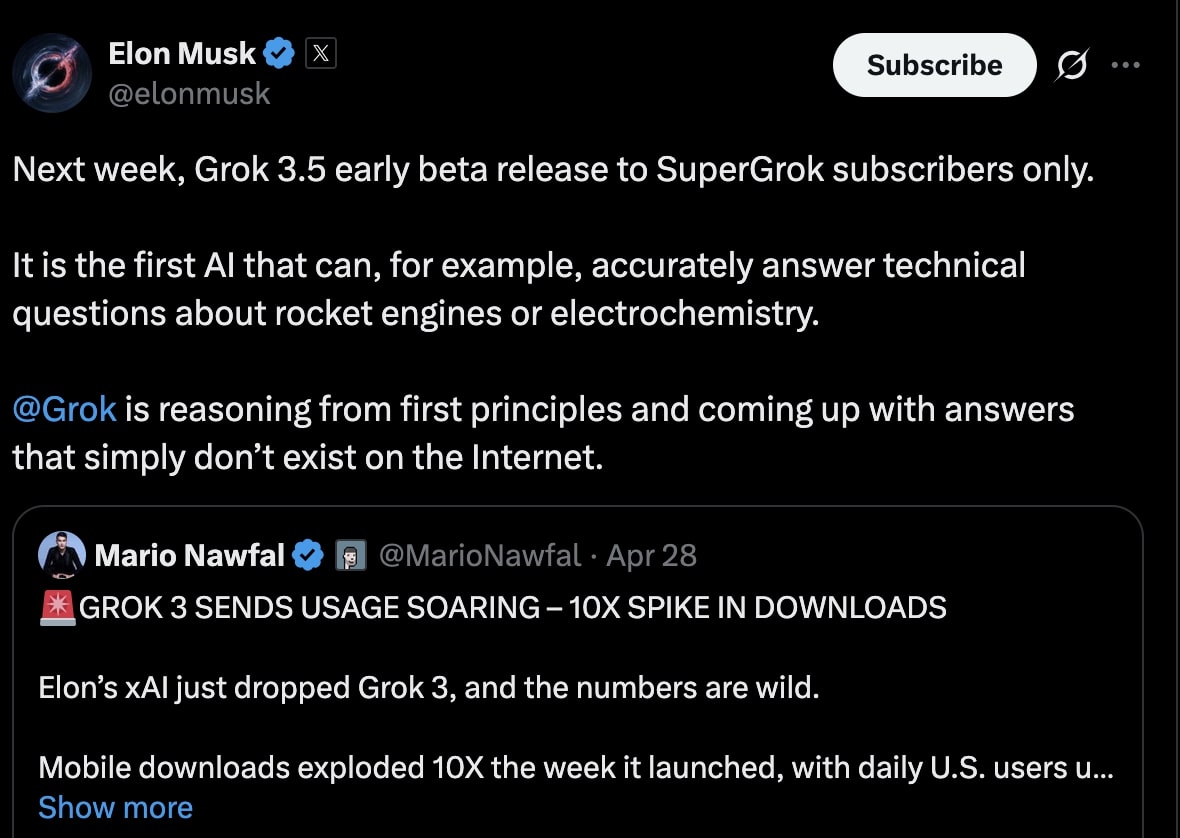

Next Week X Ais Grok 3 5 Update For Super Grok Subscribers

May 03, 2025

Next Week X Ais Grok 3 5 Update For Super Grok Subscribers

May 03, 2025 -

Singapore May Weather 34 C Heatwave Thunderstorms And Rainfall Predicted

May 03, 2025

Singapore May Weather 34 C Heatwave Thunderstorms And Rainfall Predicted

May 03, 2025 -

New Report Exposes Tensions In Fettermans Marriage And Questions His Fitness For Office

May 03, 2025

New Report Exposes Tensions In Fettermans Marriage And Questions His Fitness For Office

May 03, 2025