Benchmarking Claude 4: How Anthropic's New Models Stack Up

Anthropic, the AI safety and research company, has unveiled its latest large language model (LLM) release, Claude 4. The release raises immediate questions about its capabilities and how it compares to existing models. This article looks at the reported benchmarks and performance of Claude 4, covering its strengths, weaknesses, and overall position within the competitive AI market.

Claude 4: A Closer Look at the Enhancements

Claude 4 brings several key improvements over earlier Claude models such as Claude 2. Anthropic has focused on enhancing reasoning abilities, factual accuracy, and overall helpfulness. While specific details regarding the model's architecture and training data remain proprietary, independent benchmarks are reported to show substantial progress across various tasks.

Benchmarking Claude 4 Against Leading LLMs

Several independent evaluations have compared Claude 4 to leading LLMs like GPT-4 and PaLM 2. These benchmarks typically involve a range of tasks, including:

- Reasoning and Problem Solving: Claude 4 shows significant improvement in complex reasoning tasks, outperforming Claude 2 and performing competitively against GPT-4 in certain scenarios. This improvement is largely attributed to advances in the model's architecture and training methodologies.

- Factual Accuracy: Factual accuracy, a crucial aspect of any LLM, has been a key focus for Anthropic. Initial findings suggest a noticeable reduction in hallucinations (fabricated information) compared to previous iterations, bringing it closer to the accuracy levels of GPT-4.

- Coding Proficiency: Claude 4 demonstrates improved coding skills, with a higher success rate in generating correct and efficient code across multiple programming languages. This matters for developers seeking reliable AI assistance.

- Toxicity and Bias: Anthropic has consistently prioritized mitigating bias and toxicity in its models. While independent assessments are still ongoing, early indications suggest Claude 4 maintains a low level of harmful outputs, in line with Anthropic's commitment to responsible AI development.

Strengths and Weaknesses of Claude 4

While Claude 4 showcases impressive advancements, it's essential to acknowledge its limitations:

- Context Window: While improved from Claude 2, the context window (the amount of text the model can process at once) might still be a limiting factor compared to some competitors.

- Cost: Access to Claude 4 might be more expensive than some open-source alternatives, making it less accessible to individuals and smaller organizations.

- Availability: Currently, access to Claude 4 is primarily through Anthropic's APIs and partnerships, limiting widespread independent testing and experimentation.

The Future of Claude and the LLM Landscape

The release of Claude 4 is a significant contribution to the LLM landscape. Its improved reasoning abilities, increased factual accuracy, and focus on safety make it a strong contender among leading models. However, given the pace of competition in AI, Anthropic will need to keep improving to stay at the forefront of the field. Further independent benchmarks and wider access to Claude 4 will be vital to understanding its long-term impact and potential. The race to develop more powerful and responsible AI models is far from over, and Claude 4 is a notable milestone along the way.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Benchmarking Claude 4: How Anthropic's New Models Stack Up. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Singer Billy Joel Cancels Tour Dates Due To Undisclosed Brain Condition

May 25, 2025

Singer Billy Joel Cancels Tour Dates Due To Undisclosed Brain Condition

May 25, 2025 -

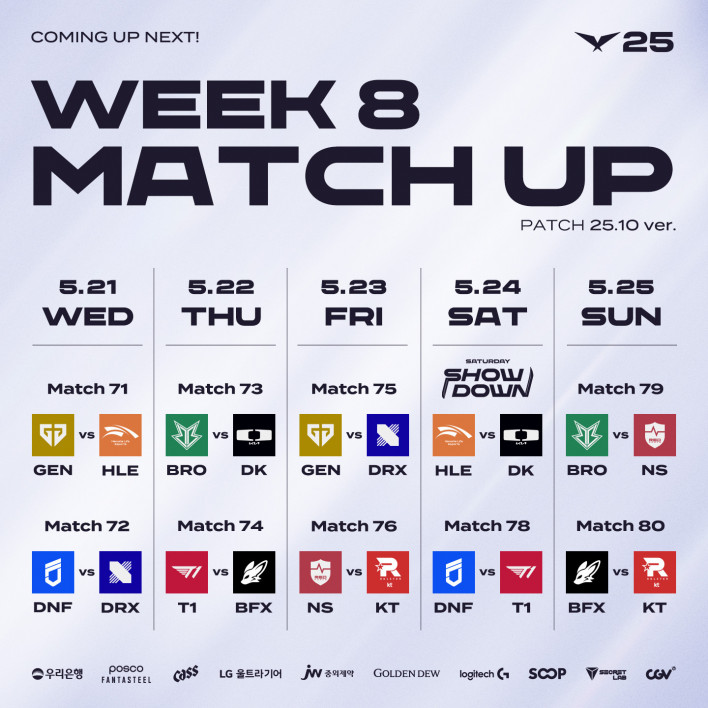

League Of Legends Hle Faces Gen G And Dplus Kia In Crucial Patch 25 10 Matches

May 25, 2025

League Of Legends Hle Faces Gen G And Dplus Kia In Crucial Patch 25 10 Matches

May 25, 2025 -

Bitcoins Legal Tender Status A Comparison Of El Salvador And The United States

May 25, 2025

Bitcoins Legal Tender Status A Comparison Of El Salvador And The United States

May 25, 2025 -

18 Year Olds And Zombies Hulus New Horror Movie

May 25, 2025

18 Year Olds And Zombies Hulus New Horror Movie

May 25, 2025 -

New Book Details Kamala Harris And Anderson Coopers Post Debate Clash Following Biden Grilling

May 25, 2025

New Book Details Kamala Harris And Anderson Coopers Post Debate Clash Following Biden Grilling

May 25, 2025

Latest Posts

-

Brasil Perspectivas Economicas Com O Foco No Copom Ipca E Dados Da China

May 25, 2025

Brasil Perspectivas Economicas Com O Foco No Copom Ipca E Dados Da China

May 25, 2025 -

Malaysias Future Rafizis Departure And The Ongoing Quest For Progress

May 25, 2025

Malaysias Future Rafizis Departure And The Ongoing Quest For Progress

May 25, 2025 -

Both Feet Left The Ground Nrl Match Review To Examine Panthers Tackle

May 25, 2025

Both Feet Left The Ground Nrl Match Review To Examine Panthers Tackle

May 25, 2025 -

High Yield Energy Cibc Analysts Best Picks In Infrastructure And Power

May 25, 2025

High Yield Energy Cibc Analysts Best Picks In Infrastructure And Power

May 25, 2025 -

De Boers Approach Oilers Stars Matchup Preview And Analysis

May 25, 2025

De Boers Approach Oilers Stars Matchup Preview And Analysis

May 25, 2025