Cerebras WSE-3 And Nvidia B200: Which AI Superchip Reigns Supreme?

Cerebras WSE-3 vs. Nvidia B200: The AI Superchip Showdown

The race for AI supremacy is heating up, and two contenders stand out: Cerebras's WSE-3 and Nvidia's B200. Both target large language model (LLM) training and inference, but they take very different routes to get there. This comparison looks at the key features of each chip, what is currently known about their performance, and the applications each is best suited to.

Understanding the Contenders:

Both the Cerebras WSE-3 and Nvidia B200 represent significant leaps forward in AI chip technology, but they take fundamentally different approaches.

Cerebras WSE-3: The Monolithic Marvel

The WSE-3 is a single wafer-scale chip containing 4 trillion transistors, a staggering feat of engineering. Keeping compute and memory on one piece of silicon gives it far more on-chip memory and bandwidth than a conventional GPU die, which reduces the data-transfer bottlenecks that affect multi-chip architectures. According to Cerebras, this translates to faster training times and improved efficiency for large-scale AI models. Key features include:

- Massive on-chip memory: Minimizes data movement, accelerating training.

- High bandwidth interconnect: Enables seamless communication between processing units.

- Specialized instructions: Optimized for AI workloads, boosting performance.

Nvidia B200: The Power of Interconnectivity

Nvidia's B200 takes a different approach. It is not a wafer-scale chip like the WSE-3; instead, many B200 GPUs are linked together over a high-speed interconnect to reach comparable and, in some cases, higher performance. This modularity offers scalability and flexibility, allowing users to size their AI infrastructure to specific needs. Key features include:

- NVLink interconnect: High-speed interconnect between GPUs for efficient data transfer.

- Scalability: Easily scale performance by adding more GPUs.

- Mature software ecosystem: Benefits from Nvidia's extensive CUDA software stack.
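
The memory trade-off behind these two feature lists can be made concrete with a rough estimate of whether a model's weights fit in a single device's memory. The sketch below is a back-of-the-envelope calculation, not a vendor sizing tool. The capacity figures (about 44 GB of on-chip SRAM for the WSE-3 and about 192 GB of HBM for the B200) are assumptions taken from publicly quoted specifications and should be checked against current datasheets, and the function names are illustrative. It counts weights only and ignores activations, optimizer state, and caches.

```python
# Back-of-the-envelope check: do a model's weights fit in one device's memory?
# Capacity figures are ASSUMPTIONS from publicly quoted specs, not measurements.
WSE3_ON_CHIP_SRAM_GB = 44.0   # assumed on-chip SRAM of one WSE-3
B200_HBM_GB = 192.0           # assumed HBM capacity of one B200


def weights_gb(params_billions: float, bytes_per_param: int) -> float:
    """Approximate weight footprint in decimal gigabytes."""
    total_bytes = params_billions * 1e9 * bytes_per_param
    return total_bytes / 1e9


def fits(params_billions: float, bytes_per_param: int, capacity_gb: float) -> bool:
    """True if the weights alone fit within the given capacity."""
    return weights_gb(params_billions, bytes_per_param) <= capacity_gb


if __name__ == "__main__":
    for size in (7, 70, 405):  # illustrative model sizes, in billions of parameters
        need = weights_gb(size, 2)  # 2 bytes per parameter (16-bit weights)
        print(
            f"{size}B params: {need:.0f} GB of weights | "
            f"fits WSE-3 SRAM: {fits(size, 2, WSE3_ON_CHIP_SRAM_GB)} | "
            f"fits B200 HBM: {fits(size, 2, B200_HBM_GB)}"
        )
```

When the weights exceed what one device holds, either platform has to spread the model across more hardware or stream data from outside the chip, which is where the interconnect features listed above start to matter.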

Performance Benchmarks: A Head-to-Head Comparison

Direct, apples-to-apples performance comparisons are challenging due to the varying architectures and benchmarking methodologies. However, early indications suggest both chips offer exceptional performance for LLMs and other AI tasks. Cerebras emphasizes the WSE-3's ability to train massive models faster and more efficiently due to its on-chip memory, while Nvidia highlights the B200's scalability and the maturity of its software ecosystem. Independent benchmarks are crucial for a definitive conclusion, and we anticipate further data emerging in the coming months.
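
One way to make figures from different vendors more comparable is to normalize raw throughput by the power each system draws. The sketch below shows only that arithmetic. `BenchmarkResult` and `rank_by_efficiency` are hypothetical names invented for this example, and the numbers in the usage section are placeholders chosen to illustrate the calculation, not measured results for either chip.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkResult:
    """Hypothetical record of one benchmark run (not a real vendor format)."""
    system: str
    tokens_per_second: float   # throughput reported for the run
    power_watts: float         # average power drawn by the whole system

    def tokens_per_joule(self) -> float:
        """Energy-normalized throughput: tokens/s divided by joules/s."""
        if self.power_watts <= 0:
            raise ValueError("power_watts must be positive")
        return self.tokens_per_second / self.power_watts


def rank_by_efficiency(results):
    """Sort results from most to least tokens per joule."""
    return sorted(results, key=lambda r: r.tokens_per_joule(), reverse=True)


if __name__ == "__main__":
    # PLACEHOLDER numbers for illustration only; not measurements of any chip.
    runs = [
        BenchmarkResult("system_a", tokens_per_second=1000.0, power_watts=500.0),
        BenchmarkResult("system_b", tokens_per_second=1500.0, power_watts=1000.0),
    ]
    for r in rank_by_efficiency(runs):
        print(f"{r.system}: {r.tokens_per_joule():.2f} tokens per joule")
```

A normalization like this only helps when both runs use the same model, precision, and batch settings, which is the gap independent benchmarks are expected to fill.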

Applications and Use Cases:

Both chips are poised to revolutionize various AI applications, including:

- Large Language Model (LLM) Training: Training and deploying state-of-the-art LLMs, pushing the boundaries of AI capabilities.

- Drug Discovery and Development: Accelerating the process of identifying and developing new drugs and therapies.

- Climate Modeling and Simulation: Improving the accuracy and efficiency of climate models.

- Financial Modeling: Enabling more sophisticated and accurate financial modeling techniques.

The Verdict: A Matter of Perspective

Choosing between the Cerebras WSE-3 and the Nvidia B200 depends on specific needs and priorities. The WSE-3 offers very large on-chip resources, which suits organizations that prioritize speed and efficiency on specific, very large models. The Nvidia B200, with its scalability and established ecosystem, provides a flexible and powerful solution for a broader range of applications and users. Ultimately, the "best" superchip depends on the user's requirements and budget. A clearer picture should form as more independent benchmarks and real-world deployments are published. This is a rapidly evolving field, and both Cerebras and Nvidia are likely to continue pushing the boundaries of AI hardware innovation.

Featured Posts

-

Jack Brearley And Brodie Palmer Convicted In Cassius Turvey Murder

May 08, 2025

Jack Brearley And Brodie Palmer Convicted In Cassius Turvey Murder

May 08, 2025 -

Record Breaking Gore Final Destination Bloodlines Review

May 08, 2025

Record Breaking Gore Final Destination Bloodlines Review

May 08, 2025 -

Masked Singers Mad Scientist Monster Unmasking The Celebrity

May 08, 2025

Masked Singers Mad Scientist Monster Unmasking The Celebrity

May 08, 2025 -

Binance Listing Ignites Kamino Kmno 30 Day Rally 100 Price Surge

May 08, 2025

Binance Listing Ignites Kamino Kmno 30 Day Rally 100 Price Surge

May 08, 2025 -

Analyzing The Math Mark Daigneaults Perspective On Fouling In Basketball

May 08, 2025

Analyzing The Math Mark Daigneaults Perspective On Fouling In Basketball

May 08, 2025

Latest Posts

-

Nyt Wordle Today Solution And Hints For May 7th Game 1418

May 08, 2025

Nyt Wordle Today Solution And Hints For May 7th Game 1418

May 08, 2025 -

Deadly Courtroom Shooting Suspect Dead After Police Encounter

May 08, 2025

Deadly Courtroom Shooting Suspect Dead After Police Encounter

May 08, 2025 -

Celtics Comeback Bid Falls Short Knicks Two Victories From Conference Finals

May 08, 2025

Celtics Comeback Bid Falls Short Knicks Two Victories From Conference Finals

May 08, 2025 -

Firebirds Prospects Challenges And Opportunities On The Road Ahead

May 08, 2025

Firebirds Prospects Challenges And Opportunities On The Road Ahead

May 08, 2025 -

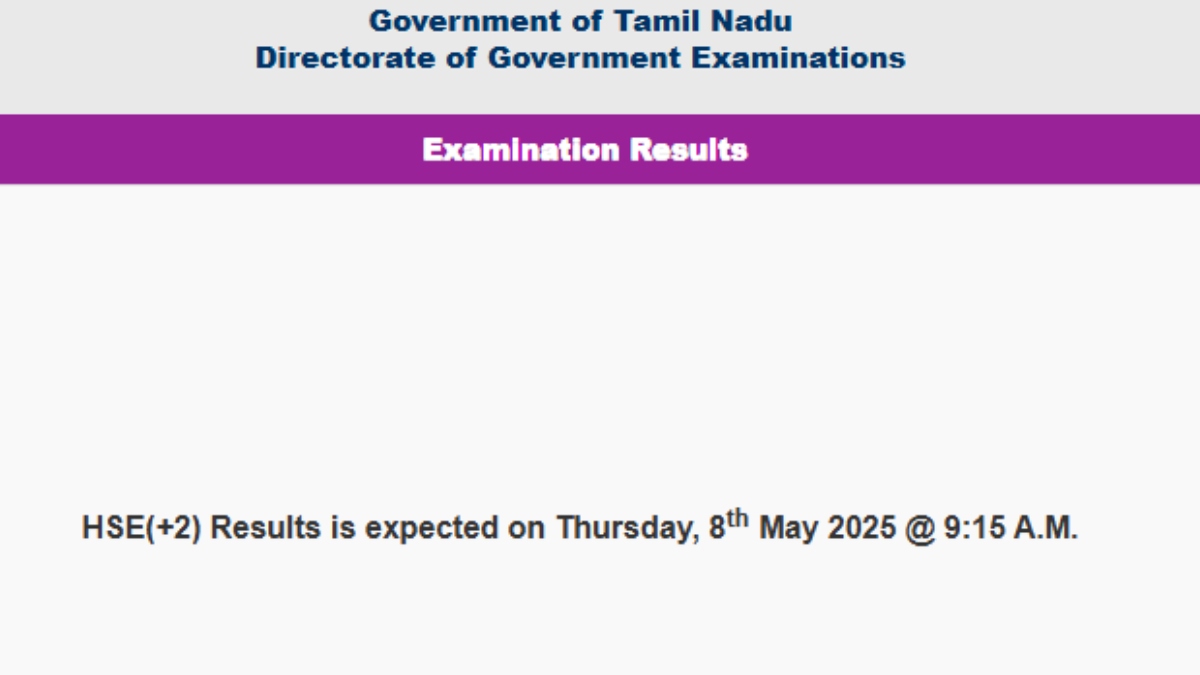

Tn 12th 2 Results 2025 Tamil Nadu Board Hsc Plus Two Exam Results Declared

May 08, 2025

Tn 12th 2 Results 2025 Tamil Nadu Board Hsc Plus Two Exam Results Declared

May 08, 2025