<h1>Cerebras WSE-3 vs. Nvidia B200: A Detailed Comparison of AI Superchips</h1>

The race to build the most powerful AI superchips is heating up, with two titans, Cerebras and Nvidia, leading the charge. Cerebras' WSE-3 and Nvidia's B200 are both behemoths, promising unprecedented performance for the most demanding AI workloads. But which one reigns supreme? This detailed comparison dives deep into the specifications, architectures, and potential applications of these groundbreaking chips.

<h2>Architectural Differences: A Tale of Two Approaches</h2>

The fundamental difference lies in their architectural philosophies. Nvidia's B200 is built on the Blackwell architecture (the successor to Hopper) and pairs two large GPU dies in a single package. Each die carries many streaming multiprocessors, which together run thousands of cores in parallel. To scale beyond one package, multiple B200 GPUs are linked through NVLink, which lets them share data efficiently. That matters for complex AI models too large for a single GPU. Think of it as a highly coordinated army, each soldier (GPU) playing its part in a larger, synchronized operation.

Cerebras, on the other hand, takes a radically different approach with the WSE-3. It is a single wafer-scale engine: one chip built from an entire silicon wafer, containing roughly 900,000 AI cores and 44 GB of on-chip SRAM, all linked by an on-wafer fabric. Keeping that traffic on the wafer avoids much of the chip-to-chip communication overhead that limits traditional multi-chip systems, which can translate into faster processing for certain applications. Imagine it as a single, powerful brain, with all processing happening within a unified structure.

<h3>Key Architectural Highlights:</h3>

- Nvidia B200: Dual-die Blackwell GPU with a high core count; multiple GPUs scale out via NVLink, making it well suited to large-scale parallel processing.

- Cerebras WSE-3: Wafer-scale architecture, minimal inter-chip communication overhead, potentially superior for specific workloads.
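To see why inter-chip communication matters, a back-of-the-envelope model helps. The sketch below is a generic illustration, not a model published by either vendor. It assumes data-parallel training in which each step's gradients are synchronized with a ring all-reduce, and it ignores latency and any overlap of communication with compute. The function names and every number in the example are hypothetical.

```python
def ring_allreduce_seconds(num_devices: int, message_bytes: float, link_bytes_per_s: float) -> float:
    """Bandwidth-only cost of a ring all-reduce.

    Each device sends (and receives) 2 * (N - 1) / N of the message,
    so time = that volume / per-link bandwidth. Latency is ignored.
    """
    if num_devices <= 1:
        return 0.0  # nothing to synchronize on a single device
    return 2 * (num_devices - 1) / num_devices * message_bytes / link_bytes_per_s


def comm_fraction(compute_s: float, num_devices: int, grad_bytes: float, link_bytes_per_s: float) -> float:
    """Share of one training step spent communicating, assuming no compute/communication overlap."""
    comm_s = ring_allreduce_seconds(num_devices, grad_bytes, link_bytes_per_s)
    return comm_s / (compute_s + comm_s)


if __name__ == "__main__":
    # Hypothetical inputs: 8 devices, 2 GB of gradients per step, 0.5 s of compute per step.
    for label, bw in [("slower link (100 GB/s)", 100e9), ("faster link (1 TB/s)", 1e12)]:
        frac = comm_fraction(0.5, 8, 2e9, bw)
        print(f"{label}: {frac:.1%} of step time spent communicating")
```

With these made-up inputs, the faster link cuts the communication share from about 6.5% to about 0.7% of each step. The point is qualitative: the faster the interconnect relative to compute, the less time is lost to synchronization, which is the bottleneck the wafer-scale design tries to sidestep by keeping traffic on one wafer.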

<h2>Performance Benchmarks: A Head-to-Head Showdown</h2>

Direct performance comparisons are tricky due to the varied nature of AI workloads and the lack of publicly available benchmarks covering both chips on identical tasks. However, early indications suggest both chips deliver exceptional performance. Cerebras highlights the WSE-3's ability to handle exceptionally large models that would struggle on other architectures due to memory limitations. Nvidia, meanwhile, emphasizes the B200's scalability and adaptability across a broader range of AI applications.

The "best" chip depends heavily on the specific application. For extremely large language models (LLMs) and certain scientific simulations, the WSE-3's large on-chip memory, very high memory bandwidth, and low communication overhead might provide a significant advantage. For more diverse workloads requiring high throughput and parallel processing, the B200's flexibility and established ecosystem could be more appealing.
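One standard way to reason about which workloads favor which design is the roofline model, a generic performance model rather than anything either vendor is quoted on here. A workload's attainable throughput is capped either by peak compute or by memory bandwidth multiplied by its arithmetic intensity (FLOPs performed per byte moved). The sketch below assumes that model; the `Accelerator` class and all numbers are hypothetical placeholders, not specifications of the WSE-3 or the B200.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical accelerator described only by two roofline parameters."""
    name: str
    peak_flops: float     # peak compute, FLOP/s
    mem_bandwidth: float  # memory bandwidth, bytes/s


def ridge_point(acc: Accelerator) -> float:
    """Arithmetic intensity (FLOP/byte) above which a workload becomes compute-bound."""
    return acc.peak_flops / acc.mem_bandwidth


def attainable_flops(acc: Accelerator, intensity: float) -> float:
    """Roofline: throughput is the lower of peak compute and bandwidth * intensity."""
    return min(acc.peak_flops, intensity * acc.mem_bandwidth)


def is_memory_bound(acc: Accelerator, intensity: float) -> bool:
    """True when memory bandwidth, not compute, limits throughput."""
    return intensity < ridge_point(acc)


if __name__ == "__main__":
    chip = Accelerator("hypothetical-chip", peak_flops=1e15, mem_bandwidth=1e12)
    # 1 FLOP/byte: low intensity, e.g. small-batch inference streaming weights from memory.
    # 5000 FLOP/byte: high intensity, e.g. large dense matrix multiplies.
    for intensity in (1.0, 5000.0):
        print(intensity, attainable_flops(chip, intensity), is_memory_bound(chip, intensity))
```

A memory-bound workload gains little from extra compute and a lot from extra bandwidth, which is one reason memory bandwidth is central to the wafer-scale argument, while compute-bound workloads reward raw throughput and the ability to scale out.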

<h2>Power Consumption and Cost: The Practical Considerations</h2>

Both chips are power-hungry beasts. Like-for-like power comparisons are difficult, because the WSE-3 ships as part of Cerebras' complete CS-3 system, while the B200 is one GPU inside a multi-GPU server, so the two are rarely measured at the same level. Either way, both require significant cooling and substantial power infrastructure. The cost of acquiring and operating either chip will be substantial, limiting their accessibility to large research institutions, corporations, and high-performance computing centers.
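Operating cost is largely an energy calculation. A rough estimate multiplies the IT power draw by hours of use and by the facility's power usage effectiveness (PUE, the ratio of total facility power to IT power, which accounts for cooling and other overhead), then by the electricity price. The sketch below assumes that simple model; the function name and all inputs are hypothetical, not measured figures for either chip.

```python
def energy_cost_usd(it_power_kw: float, hours: float, pue: float, usd_per_kwh: float) -> float:
    """Rough electricity cost: IT power scaled by PUE for cooling/overhead, times hours and price."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 (facility power >= IT power)")
    facility_kwh = it_power_kw * pue * hours
    return facility_kwh * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical system: 10 kW IT load, PUE 1.3, one day of use, $0.10/kWh.
    print(f"${energy_cost_usd(10.0, 24.0, 1.3, 0.10):.2f} per day")
```

With these illustrative inputs the estimate comes to about 312 kWh, or roughly $31.20 per day; substituting real power figures and local prices is what turns it into a useful budget.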

<h2>Applications and Use Cases: Where Each Chip Excels</h2>

- Cerebras WSE-3: Ideal for computationally intensive tasks like large language model training, drug discovery, and genomics research where massive model sizes and low communication latency are paramount.

- Nvidia B200: Well suited to a broader range of AI applications, including image recognition, natural language processing, recommendation systems, and various scientific simulations. Its mature software ecosystem, built around CUDA, is a significant advantage.

<h2>Conclusion: No Clear Winner, but Distinct Strengths</h2>

The Cerebras WSE-3 and Nvidia B200 represent two distinct approaches to building the next generation of AI superchips. There's no single "winner"; the best choice depends entirely on the specific application requirements and available resources. Cerebras offers a unique architecture optimized for extreme scale and low communication latency, while Nvidia provides a more versatile and widely supported solution. As both technologies mature and more benchmarks are published, their relative strengths and weaknesses will come into clearer focus. The future of AI computing is bright, and these two powerful contenders are driving much of its innovation.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Cerebras WSE-3 Vs. Nvidia B200: A Detailed Comparison Of AI Superchips. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Kamino Kmno Post Binance Listing Surge A 30 Day Rally Analysis

May 08, 2025

Kamino Kmno Post Binance Listing Surge A 30 Day Rally Analysis

May 08, 2025 -

Firebirds Playoff Push Key Kraken Prospects To Follow

May 08, 2025

Firebirds Playoff Push Key Kraken Prospects To Follow

May 08, 2025 -

Stephen Curry Exits Game 1 With Hamstring Strain

May 08, 2025

Stephen Curry Exits Game 1 With Hamstring Strain

May 08, 2025 -

Road To Success Firebirds Prospects For The Season

May 08, 2025

Road To Success Firebirds Prospects For The Season

May 08, 2025 -

George Pickens Trade Rumors Heat Up Potential Destinations And Impact

May 08, 2025

George Pickens Trade Rumors Heat Up Potential Destinations And Impact

May 08, 2025

Latest Posts

-

Solve Nyt Wordle 1418 May 7 Clues And The Answer

May 08, 2025

Solve Nyt Wordle 1418 May 7 Clues And The Answer

May 08, 2025 -

Dbs Stock Price Jumps After Reporting Better Than Expected Earnings

May 08, 2025

Dbs Stock Price Jumps After Reporting Better Than Expected Earnings

May 08, 2025 -

Cassius Turvey Murder Trial Brearley And Palmer Receive Guilty Verdict

May 08, 2025

Cassius Turvey Murder Trial Brearley And Palmer Receive Guilty Verdict

May 08, 2025 -

Hyeseong Kims On Base Strategy Insights From A 10 1 Win

May 08, 2025

Hyeseong Kims On Base Strategy Insights From A 10 1 Win

May 08, 2025 -

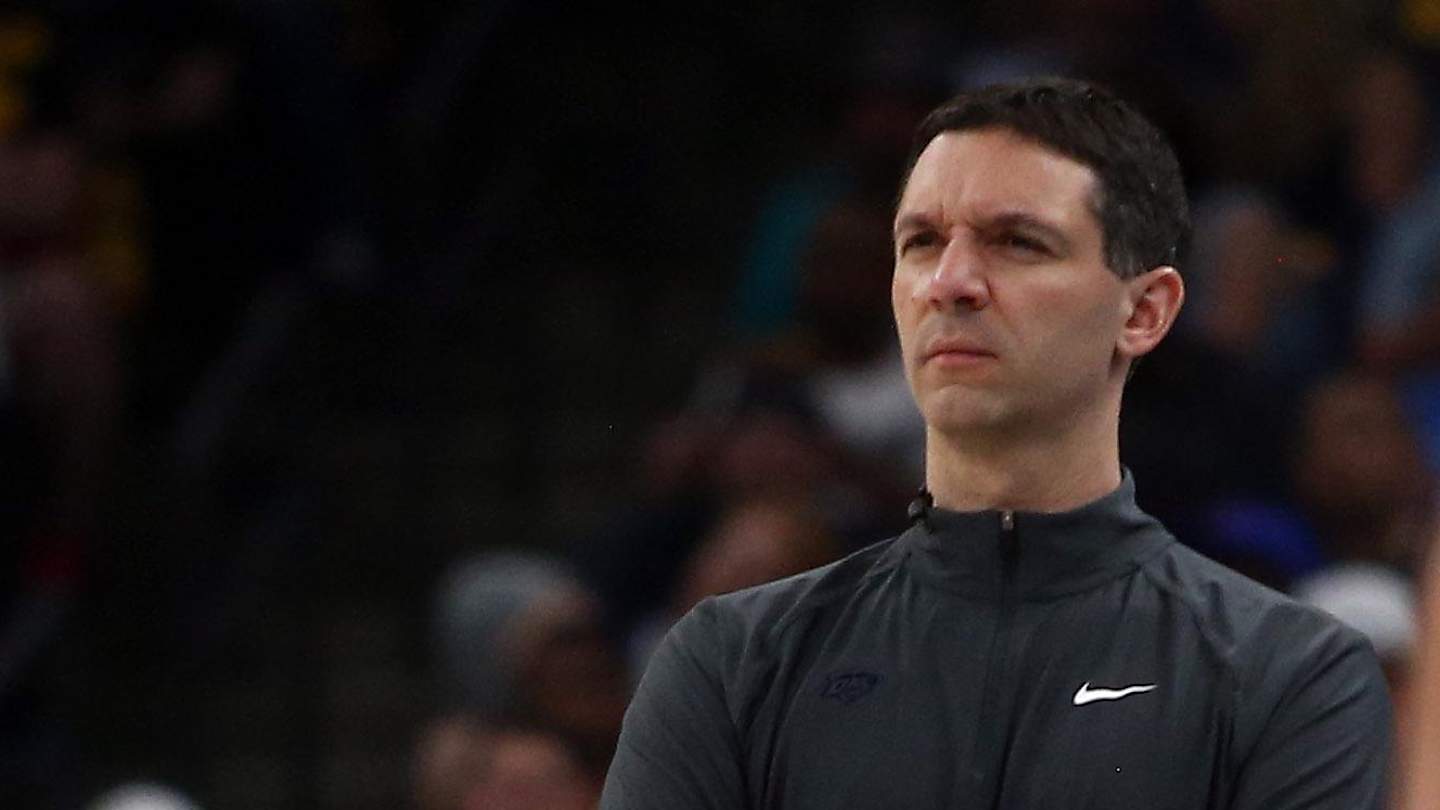

Nba Strategy Mark Daigneaults Perspective On Fouling And 3 Pointers

May 08, 2025

Nba Strategy Mark Daigneaults Perspective On Fouling And 3 Pointers

May 08, 2025