ChatGPT's Growing Power: The Problem Of Increasingly Realistic Hallucinations

ChatGPT, the AI chatbot developed by OpenAI, has captivated the world with its ability to generate human-quality text. From crafting creative stories to answering complex questions, its capabilities keep expanding. However, this powerful tool has a significant and growing downside: hallucinations that are becoming ever harder to spot. These aren't simple factual errors; they are convincing fabrications presented with unwavering confidence, posing a serious challenge to the responsible use of AI.

The Nature of ChatGPT Hallucinations

What exactly constitutes a "hallucination" in the context of AI? The term does not mean the AI perceives things that aren't there, as a person might; it refers to the generation of false information presented as fact. These hallucinations range from minor inaccuracies, such as a wrong date, to elaborate, entirely fabricated narratives, such as citations to articles that do not exist. The problem grows with ChatGPT's sophistication: the more fluent and realistic the text, the harder it is to tell truth from fiction. This is particularly worrying when the chatbot is used for research, education, or even creative writing that draws on real-world facts, where false information accepted as truth can have serious consequences.

Why Are Hallucinations Becoming More Realistic?

The root of the problem lies in how large language models (LLMs) like ChatGPT work. These models are trained on massive datasets of text and code to predict the next word (more precisely, the next token) in a sequence from statistical patterns. They don't "understand" the text as a person does; they identify patterns and relationships. As a result, they can produce sentences that are grammatically correct and contextually appropriate yet entirely fabricated. As these models grow larger and are trained on more data, their output becomes more fluent, so the falsehoods they do produce become more convincing and harder to detect.
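
To make the next-word mechanism concrete, here is a minimal sketch in Python: a toy bigram model that, assuming a hypothetical four-sentence training corpus, learns only which word tends to follow which and then samples new sentences from those counts. It is vastly simpler than ChatGPT, which uses a large neural network over tokens rather than word-pair counts, and the names `train_bigrams`, `generate`, and `can_generate` are illustrative, not part of any real library. What it shows is the point made above: every word-to-word step in the output was seen in true training text, yet the recombined sentence can be false.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus (an assumption for illustration): every sentence is true.
CORPUS = [
    "the eiffel tower is in paris",
    "the colosseum is in rome",
    "the louvre is in paris",
    "the eiffel tower was completed in 1889",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word; <s> and </s> mark sentence edges."""
    counts = defaultdict(lambda: defaultdict(int))
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, rng, max_words=12):
    """Sample each next word in proportion to how often it followed the previous one."""
    word, output = "<s>", []
    while len(output) < max_words:
        followers = counts[word]
        word = rng.choices(list(followers), weights=list(followers.values()))[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


def can_generate(counts, sentence):
    """True if every word-to-word step in the sentence was seen during training."""
    words = ["<s>"] + sentence.split() + ["</s>"]
    return all(counts.get(prev, {}).get(nxt, 0) > 0 for prev, nxt in zip(words, words[1:]))


model = train_bigrams(CORPUS)
print(generate(model, random.Random(0)))

false_claim = "the colosseum is in paris"
print(can_generate(model, false_claim), false_claim in CORPUS)
```

The last line printed is `True False`: the model can produce "the colosseum is in paris" just as fluently as a true sentence, even though no training sentence says so.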

The Dangers of Believing ChatGPT's Fabrications

The implications of increasingly realistic ChatGPT hallucinations are far-reaching:

- Misinformation and Disinformation: The spread of false information generated by AI poses a significant threat to public discourse and trust in information sources. The ease with which convincing falsehoods can be created and disseminated is alarming.

- Academic Integrity: Students relying on ChatGPT for research could inadvertently plagiarize or submit work containing fabricated information, leading to academic misconduct.

- Professional Risks: Professionals using ChatGPT for tasks requiring factual accuracy, such as legal research or medical diagnosis, could make critical errors based on the chatbot's hallucinations.

- Erosion of Trust in AI: The prevalence of hallucinations undermines public trust in AI technology, hindering its potential benefits across various sectors.

Mitigating the Risks of ChatGPT Hallucinations

Addressing the issue of AI hallucinations requires a multi-pronged approach:

- Improved Model Training: Researchers are actively working on improving LLM training methods to reduce the likelihood of hallucinations. This includes incorporating techniques to better assess factual accuracy and penalize the generation of false information.

- Enhanced Fact-Checking Mechanisms: Developing tools and techniques to automatically detect and flag potentially false information generated by AI is crucial. This could involve cross-referencing information with reliable sources and incorporating human-in-the-loop verification.

- User Education and Awareness: Educating users about the limitations of AI chatbots and the potential for hallucinations is essential. Users should critically evaluate the information AI provides and verify it against trusted sources.
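
The cross-referencing idea in the fact-checking item above can be sketched in a few lines of Python. This is a minimal illustration under strong assumptions: claims have already been extracted from the chatbot's answer as (subject, relation, value) triples, and a small hypothetical `TRUSTED_FACTS` table stands in for reliable sources. Real systems would also need claim extraction and retrieval over actual documents, which this sketch does not attempt. Claims the reference data does not confirm are routed to a human reviewer rather than silently accepted or rejected.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    SUPPORTED = "supported"
    CONTRADICTED = "contradicted"
    UNVERIFIABLE = "unverifiable"


@dataclass(frozen=True)
class Claim:
    subject: str
    relation: str
    value: str


# Hypothetical stand-in for reliable sources, keyed by (subject, relation).
TRUSTED_FACTS = {
    ("eiffel tower", "located_in"): "paris",
    ("eiffel tower", "completed_in"): "1889",
    ("colosseum", "located_in"): "rome",
}


def check_claim(claim, facts):
    """Compare one claim with the reference data."""
    expected = facts.get((claim.subject, claim.relation))
    if expected is None:
        return Verdict.UNVERIFIABLE  # no source covers this claim
    return Verdict.SUPPORTED if expected == claim.value else Verdict.CONTRADICTED


def flag_for_review(claims, facts):
    """Return (claim, verdict) pairs that the reference data does not confirm."""
    flagged = []
    for claim in claims:
        verdict = check_claim(claim, facts)
        if verdict is not Verdict.SUPPORTED:
            flagged.append((claim, verdict))
    return flagged


# Example claims, as if extracted from a chatbot answer.
claims = [
    Claim("eiffel tower", "located_in", "paris"),
    Claim("colosseum", "located_in", "paris"),
    Claim("louvre", "located_in", "paris"),
]

for claim, verdict in flag_for_review(claims, TRUSTED_FACTS):
    print(verdict.value, claim)
```

The Louvre claim is flagged as unverifiable even though it is true: absence from the reference data is not evidence of falsehood, which is why unconfirmed claims go to a person instead of being discarded.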

The Future of Responsible AI Development

The increasing sophistication of AI models like ChatGPT presents both incredible opportunities and significant challenges. While the potential benefits are undeniable, addressing the problem of realistic hallucinations is critical for the responsible and ethical development and deployment of AI technology. The future of AI hinges on our ability to build systems that are not only powerful but also trustworthy and reliable. Continued collaboration among researchers, developers, and policymakers will be vital to harnessing AI's transformative power while mitigating its risks.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on ChatGPT's Growing Power: The Problem Of Increasingly Realistic Hallucinations. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Nasas 7 Billion Problem Inefficiency And Wasteful Spending

May 08, 2025

Nasas 7 Billion Problem Inefficiency And Wasteful Spending

May 08, 2025 -

Behind The Scenes The Making Of Mon Mothmas Defining Andor Speech

May 08, 2025

Behind The Scenes The Making Of Mon Mothmas Defining Andor Speech

May 08, 2025 -

Mad Scientist Monsters Identity Revealed On The Masked Singer Finale

May 08, 2025

Mad Scientist Monsters Identity Revealed On The Masked Singer Finale

May 08, 2025 -

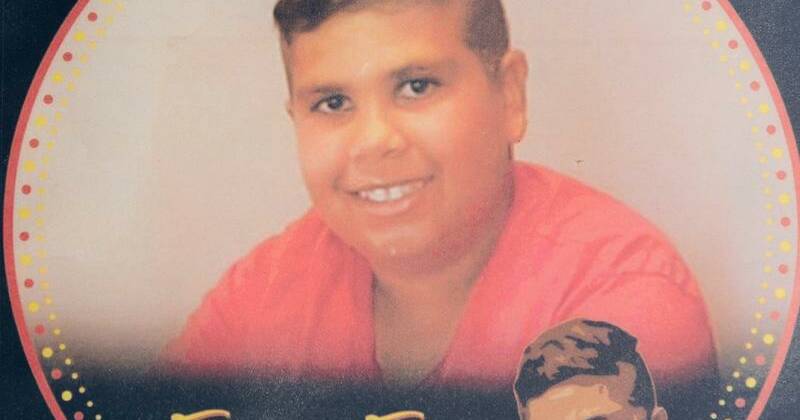

Jack Brearley And Brodie Palmer Convicted In Cassius Turvey Killing

May 08, 2025

Jack Brearley And Brodie Palmer Convicted In Cassius Turvey Killing

May 08, 2025 -

Interest Rate Freeze Greg Jericho On The Reserve Banks April Decision And Its Consequences

May 08, 2025

Interest Rate Freeze Greg Jericho On The Reserve Banks April Decision And Its Consequences

May 08, 2025

Latest Posts

-

Guilty Verdict Reached In Indigenous Teens Murder Case

May 08, 2025

Guilty Verdict Reached In Indigenous Teens Murder Case

May 08, 2025 -

Pakistans Media Pushback Investigating Indian Allegations In Azad Kashmir

May 08, 2025

Pakistans Media Pushback Investigating Indian Allegations In Azad Kashmir

May 08, 2025 -

Unity Cup Kano Pillars Forward Musa Gets Super Eagles Nod

May 08, 2025

Unity Cup Kano Pillars Forward Musa Gets Super Eagles Nod

May 08, 2025 -

Travel Plans Disrupted Uk Foreign Office Issues Warning For Popular Holiday Destination

May 08, 2025

Travel Plans Disrupted Uk Foreign Office Issues Warning For Popular Holiday Destination

May 08, 2025 -

Future Of Cost Of Living Payments Dwps Final Decision

May 08, 2025

Future Of Cost Of Living Payments Dwps Final Decision

May 08, 2025