Deploying Meta's Llama 2 With Workers AI: A Step-by-Step Tutorial



Meta's Llama 2 gives developers worldwide access to a powerful, openly available large language model (LLM), but deploying it effectively can seem daunting. This tutorial walks through deploying Llama 2 on Workers AI, step by step, so you can take advantage of the platform's efficiency and scalability when integrating the model into your projects.

Why Choose Workers AI for Llama 2 Deployment?

Several platforms allow Llama 2 deployment, but Workers AI offers compelling advantages:

- Scalability: Handle fluctuating demand effortlessly. Workers AI automatically scales your application based on usage, ensuring consistent performance even during peak times.

- Cost-Effectiveness: Pay only for the resources you consume. This efficient pricing model is perfect for testing and deploying Llama 2 without breaking the bank.

- Ease of Use: Workers AI provides a streamlined developer experience, simplifying the complexities of LLM deployment.

- Global Reach: Deploy your application globally with Workers AI's edge network, ensuring low latency for users worldwide.

Step-by-Step Deployment Guide:

This tutorial assumes you have a basic understanding of JavaScript and command-line interfaces. Let's begin!

Step 1: Setting up your Workers AI Account:

If you don't already have one, create a free account at [Insert Workers AI signup link here]. Familiarize yourself with the Workers AI dashboard and its key features.

Step 2: Choosing the Right Llama 2 Model:

Meta offers various Llama 2 models (7B, 13B, and 70B parameters). For initial deployment and testing, the 7B parameter model is a great starting point due to its smaller size and faster processing times. Larger models require significantly more resources.
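To get a feel for why the larger models need more resources, a rough back-of-the-envelope estimate is parameter count times bytes per parameter. The sketch below assumes 16-bit weights by default (2 bytes per parameter) and only counts the weights themselves; activations, caches, and runtime overhead are ignored, so real requirements are higher. The function and constant names are illustrative.

```javascript
// Parameter counts for the three Llama 2 sizes mentioned above.
const LLAMA2_PARAMS = { '7B': 7e9, '13B': 13e9, '70B': 70e9 };

// Rough weight-memory estimate in gigabytes (1 GB = 1e9 bytes).
// bytesPerParam = 2 assumes 16-bit weights; pass 1 for 8-bit quantization.
function estimateWeightGB(paramCount, bytesPerParam = 2) {
  return (paramCount * bytesPerParam) / 1e9;
}

for (const [name, params] of Object.entries(LLAMA2_PARAMS)) {
  const fp16 = estimateWeightGB(params);
  const int8 = estimateWeightGB(params, 1);
  console.log(`${name}: ~${fp16} GB at 16-bit, ~${int8} GB at 8-bit`);
}
```

By this estimate the 70B model needs ten times the weight memory of the 7B model, which is why the 7B model is the more practical choice for a first deployment.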

Step 3: Preparing your Code:

You'll need to write a Workers AI script that interacts with your chosen Llama 2 model. This script will handle user input, send it to the model for processing, and return the generated response. Here's a simplified example (remember to adapt this based on your specific requirements and chosen Llama 2 model):

    addEventListener('fetch', event => {
      event.respondWith(handleRequest(event.request));
    });

    async function handleRequest(request) {
      // Read the prompt from the JSON request body
      const { prompt } = await request.json();

      // Replace with your Llama 2 API call
      const response = await fetch('YOUR_LLAMA_2_API_ENDPOINT', {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify({ prompt })
      });
      const data = await response.json();

      // Return the model output to the caller as JSON
      return new Response(JSON.stringify(data), {
        headers: { 'Content-Type': 'application/json' }
      });
    }
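As an alternative to calling a placeholder HTTP endpoint, the model can be invoked through a Workers AI binding. The following is a minimal sketch, not a definitive implementation: it assumes a binding named AI is configured for the Worker, and the model identifier shown is an assumption that should be checked against the current Workers AI model catalog.

```javascript
// Model identifier is an assumption; confirm it in the Workers AI catalog.
const MODEL = '@cf/meta/llama-2-7b-chat-int8';

const worker = {
  // env.AI is assumed to be a Workers AI binding named "AI".
  async fetch(request, env) {
    if (request.method !== 'POST') {
      return new Response('Send a POST request with a JSON body', { status: 405 });
    }

    // Read the prompt from the JSON request body
    const { prompt } = await request.json();

    // Run the model through the binding instead of an external endpoint
    const result = await env.AI.run(MODEL, { prompt });

    return new Response(JSON.stringify(result), {
      headers: { 'Content-Type': 'application/json' }
    });
  }
};

// In a Worker module, this object would be the default export:
// export default worker;
```

Because the handler receives the binding through `env`, it can be exercised locally by passing in a stand-in object with a `run` method.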

Step 4: Deploying your Worker:

- Create a new Worker: In your Workers AI dashboard, create a new project and upload your JavaScript code.

- Configure Environment Variables: Securely store your Llama 2 API key and other sensitive information as environment variables within your Workers AI project settings.

- Deploy: Click the deploy button and follow the onscreen instructions.
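Once the API key is stored as an environment variable, the Worker can read it at request time instead of hard-coding it. The sketch below shows one way this might look. The variable names LLAMA_API_KEY and LLAMA_API_URL are hypothetical, and the Bearer authorization scheme is an assumption about the upstream API rather than something this tutorial specifies.

```javascript
// Build the upstream request from values held in the environment.
// LLAMA_API_KEY and LLAMA_API_URL are hypothetical variable names.
function buildModelRequest(prompt, env) {
  if (!env.LLAMA_API_KEY) {
    // Fail early with a clear message if the secret was never configured
    throw new Error('LLAMA_API_KEY is not configured');
  }
  return new Request(env.LLAMA_API_URL, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      // Bearer authentication is an assumption about the upstream API
      'Authorization': `Bearer ${env.LLAMA_API_KEY}`
    },
    body: JSON.stringify({ prompt })
  });
}
```

Inside a handler, the result can be passed straight to `fetch(buildModelRequest(prompt, env))`, so the key never appears in the source code.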

Step 5: Testing and Iteration:

After deployment, thoroughly test your Worker using the provided testing tools within the Workers AI dashboard. Monitor performance metrics and iterate on your code based on the results. Adjust parameters and refine your prompts for optimal results.
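Two small helpers can make this testing loop more systematic: one that rejects malformed input before it reaches the model, and one that times a single request against a handler. This is a sketch under stated assumptions: the function names are illustrative, the 2,000-character limit is an arbitrary example value, and `handler` stands for any function that takes a Request and returns a promise of a Response.

```javascript
// Arbitrary illustrative limit; tune it for your model and use case.
const MAX_PROMPT_CHARS = 2000;

// Check that the parsed request body contains a usable prompt.
function validatePrompt(body) {
  if (typeof body?.prompt !== 'string' || body.prompt.trim() === '') {
    return { ok: false, error: 'prompt must be a non-empty string' };
  }
  if (body.prompt.length > MAX_PROMPT_CHARS) {
    return { ok: false, error: `prompt exceeds ${MAX_PROMPT_CHARS} characters` };
  }
  return { ok: true, prompt: body.prompt.trim() };
}

// Send one prompt to a handler and report the status and elapsed time.
async function timeRequest(handler, prompt) {
  const request = new Request('https://example.com/', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ prompt })
  });
  const start = Date.now();
  const response = await handler(request);
  return { status: response.status, ms: Date.now() - start };
}
```

Running `timeRequest` over a handful of representative prompts gives a simple baseline to compare against after each change to the code or the prompt wording.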

Step 6: Scaling your Deployment:

As your application gains traction, Workers AI scales it automatically, so it stays responsive and efficient even under heavy load.

Conclusion:

Deploying Meta's Llama 2 with Workers AI offers a powerful combination of an advanced open-source LLM and a scalable, user-friendly cloud platform. This tutorial provides a solid foundation for your journey into leveraging the capabilities of Llama 2. Remember to consult the official Llama 2 and Workers AI documentation for more advanced features and troubleshooting. Happy coding!


Featured Posts

-

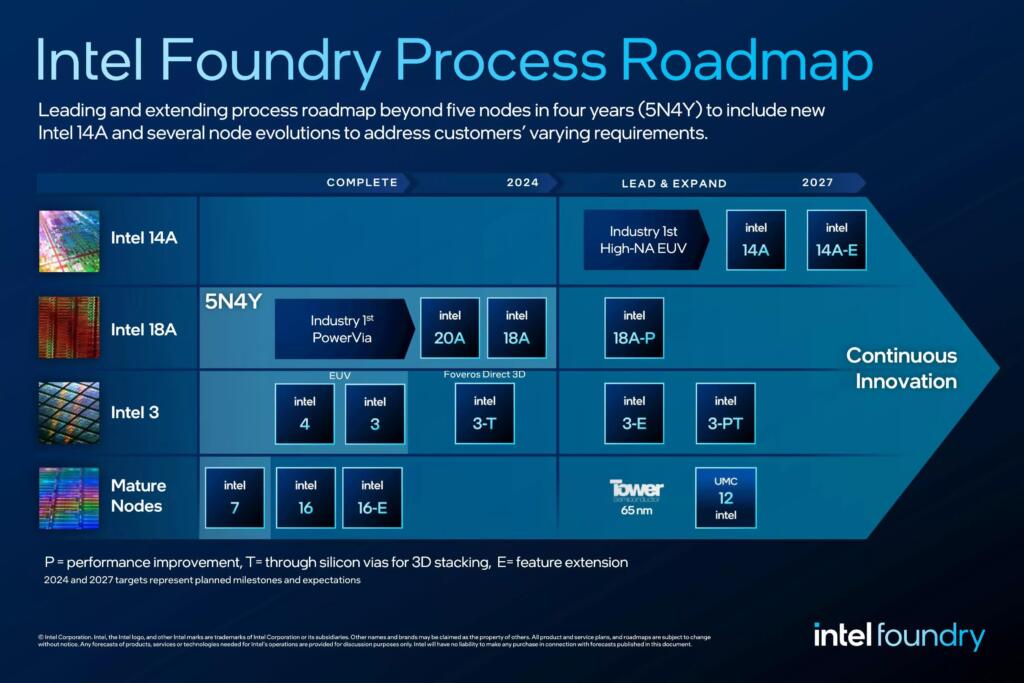

Intels 18 Angstrom Chips A 2025 Production Roadmap

Apr 07, 2025

Intels 18 Angstrom Chips A 2025 Production Roadmap

Apr 07, 2025 -

Stolen Crypto Traced De Fi Transparency Exposes Bybit Hackers

Apr 07, 2025

Stolen Crypto Traced De Fi Transparency Exposes Bybit Hackers

Apr 07, 2025 -

Close Call Abbotsford Canucks Secure 3 2 Win Over Laval Rocket In Shootout

Apr 07, 2025

Close Call Abbotsford Canucks Secure 3 2 Win Over Laval Rocket In Shootout

Apr 07, 2025 -

Ferrari Sf 25 Upgrades Hamilton And Leclercs Urgent Plea

Apr 07, 2025

Ferrari Sf 25 Upgrades Hamilton And Leclercs Urgent Plea

Apr 07, 2025 -

Mac Kenzie Weegars Honest Admission Fabricating Stories When Words Fail

Apr 07, 2025

Mac Kenzie Weegars Honest Admission Fabricating Stories When Words Fail

Apr 07, 2025