Easing AI Safety Concerns: OpenAI's Response To Altman's Authoritarian AGI Risks

Sam Altman's recent warnings about the potential for authoritarian misuse of advanced Artificial General Intelligence (AGI) have sparked global debate. OpenAI, the company he leads, is working to mitigate these risks. This article examines its multifaceted approach, addressing the main concerns and outlining the strategies intended to ensure the safe and beneficial development of AGI.

Rapid advances in AI, particularly in large language models (LLMs), have generated both excitement and apprehension. While the potential benefits are immense, the possibility of powerful AI falling into the wrong hands, as OpenAI CEO Sam Altman has highlighted, is a legitimate and pressing concern. Altman's stark warnings that authoritarian regimes could weaponize AGI have galvanized the tech community and policymakers alike, prompting calls for proactive solutions.

OpenAI's Proactive Measures: A Multi-Pronged Approach

OpenAI is not simply reacting to these anxieties; it is actively shaping the future of AI safety. Its response encompasses several key strategies:

- Strengthening AI Alignment Research: A core focus is aligning advanced AI systems with human values. This involves developing techniques to ensure that AI behaves predictably and remains beneficial even as its capabilities grow. OpenAI is investing heavily in this area and collaborating with researchers worldwide on novel alignment approaches.

- Robust Safety Protocols and Red Teaming: Rigorous testing and evaluation are paramount. OpenAI uses red teaming, simulating potential misuse scenarios to identify and address vulnerabilities before a model is deployed, with the aim of minimizing the risks posed by powerful AI models.

- Promoting Transparency and Collaboration: OpenAI advocates for transparency within the AI community. Sharing research findings, best practices, and safety protocols fosters collaboration and accelerates progress in responsible AI development. Open communication is vital to mitigating potential dangers.

- International Collaboration and Policy Engagement: The implications of AGI are global. OpenAI engages with policymakers and international organizations to develop ethical guidelines and regulations for the development and deployment of AI. This collaboration is essential for establishing a safe and responsible global AI ecosystem.

Addressing Specific Concerns: Beyond the Hype

While the potential for misuse is real, it is important not to sensationalize the risks. OpenAI's response addresses several specific concerns:

- Misinformation and Propaganda: OpenAI is working on techniques to detect and limit the spread of AI-generated misinformation and propaganda, including developing detection algorithms and collaborating with fact-checking organizations.

- Bias and Discrimination: Addressing bias in AI models is a priority. OpenAI is researching methods to mitigate biases in training data and model architectures, with the goal of fairer and more equitable outcomes.

- Autonomous Weapons Systems: OpenAI has spoken out against the development and deployment of autonomous weapons systems (AWS), emphasizing the inherent dangers of delegating life-or-death decisions to AI.

The Road Ahead: A Collective Responsibility

The development of AGI presents both enormous opportunities and significant challenges. OpenAI's response to Altman's warnings signals a commitment to responsible innovation built on proactive measures and collaboration. However, addressing the safety concerns surrounding AGI is not the responsibility of any single organization; it requires a collective effort from researchers, policymakers, and the broader tech community. Sustained dialogue among these groups will be crucial to navigating this complex landscape and ensuring a future in which AI benefits all of humanity.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Easing AI Safety Concerns: OpenAI's Response To Altman's Authoritarian AGI Risks. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Comprehensive Preview Toulouse Vs Lille Analysis Team News And Score Prediction

Apr 13, 2025

Comprehensive Preview Toulouse Vs Lille Analysis Team News And Score Prediction

Apr 13, 2025 -

Premier League Roundup Arsenal Everton And Key Weekend Results

Apr 13, 2025

Premier League Roundup Arsenal Everton And Key Weekend Results

Apr 13, 2025 -

Limitadas Rotaciones En Butarque Como Afectara Al Leganes

Apr 13, 2025

Limitadas Rotaciones En Butarque Como Afectara Al Leganes

Apr 13, 2025 -

Juventus Starting Xi Revealed Watch The Lecce Match Live

Apr 13, 2025

Juventus Starting Xi Revealed Watch The Lecce Match Live

Apr 13, 2025 -

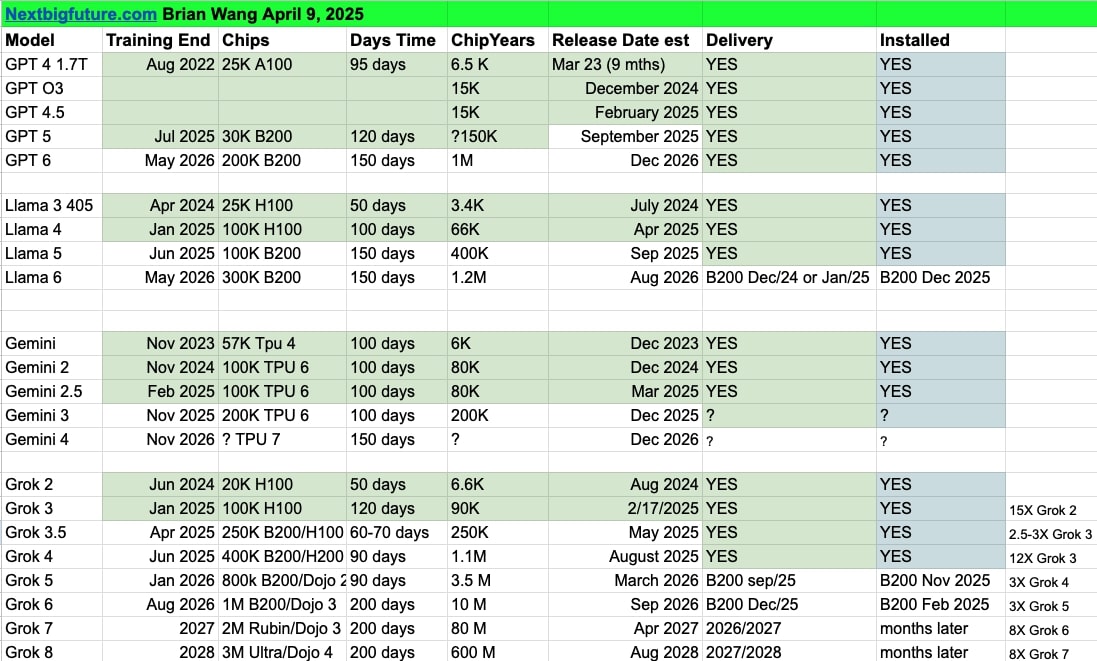

Grok 3 5 And Grok 4 Release Dates X Ais Ai Development Timeline

Apr 13, 2025

Grok 3 5 And Grok 4 Release Dates X Ais Ai Development Timeline

Apr 13, 2025