iPhone Dictation Error: "Trump" Misinterpreted as "Racist" and Apple's Fix

iPhone Dictation Error: "Trump" Mistaken for "Racist"—Apple's Swift Response

A recent software glitch in Apple's iPhone dictation feature sparked controversy after users reported the voice-to-text function repeatedly misinterpreting the word "Trump" as "racist." The error, highlighting potential biases in AI algorithms, prompted swift action from Apple.

The incident, which quickly went viral on social media, saw numerous users sharing screenshots and videos demonstrating the unexpected transcription error. The issue wasn't limited to a specific iPhone model or iOS version, suggesting a widespread problem within Apple's dictation algorithm. This raised concerns about the potential for algorithmic bias in widely used technologies and the implications for fair and unbiased communication.

How did it happen? Understanding the AI Glitch

Apple's dictation feature relies on sophisticated machine learning algorithms trained on vast datasets of text and speech. While these algorithms are designed to accurately transcribe speech, they can inherit biases present within the training data. In this case, the algorithm appears to have associated the name "Trump" with the term "racist," likely due to the prevalence of such associations within certain online discussions and news coverage. This highlights a crucial challenge in AI development: ensuring that algorithms are trained on diverse and unbiased data to avoid perpetuating harmful stereotypes.
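As a rough illustration of how skewed text data can tip a transcription, the toy sketch below combines an acoustic score with a word-frequency prior and picks the higher-scoring candidate. It is not Apple's system: the function `pick_word`, the placeholder words, and all the numbers are hypothetical and chosen only to show the mechanism.

```python
import math

def pick_word(acoustic_scores, context_counts, lm_weight=1.0):
    """Return the candidate word with the highest combined log score.

    acoustic_scores: candidate word -> probability that the audio matches it
    context_counts:  candidate word -> how often it appeared in this context
                     in the (hypothetical) training text
    lm_weight:       how strongly the text prior influences the decision
    """
    total = sum(context_counts.values())
    best_word, best_score = None, -math.inf
    for word, p_audio in acoustic_scores.items():
        # Add-one smoothing so a word never seen in text keeps a nonzero prior.
        p_text = (context_counts.get(word, 0) + 1) / (total + len(acoustic_scores))
        score = math.log(p_audio) + lm_weight * math.log(p_text)
        if score > best_score:
            best_word, best_score = word, score
    return best_word

# Hypothetical inputs: the audio slightly favours the word actually spoken.
acoustic = {"spoken_word": 0.6, "other_word": 0.4}
balanced = {"spoken_word": 50, "other_word": 50}   # even training text
skewed = {"spoken_word": 5, "other_word": 95}      # lopsided training text

print(pick_word(acoustic, balanced))  # text prior is neutral
print(pick_word(acoustic, skewed))    # text prior outweighs the audio
```

With the balanced counts the audio evidence decides the outcome; with the lopsided counts the text prior overrides it, which is the kind of inherited association the paragraph above describes.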

Apple's Immediate Action: A Patch

Following the widespread reports, Apple acknowledged the problem and released a software update that corrected the dictation error for affected users. Although Apple has not publicly commented on the specific cause of the error, the speed of its response suggests a commitment to addressing algorithmic bias and maintaining the accuracy of its products. This quick turnaround contrasts with some other tech companies' slower responses to similar controversies.

Beyond the Immediate Fix: Broader Implications for AI Ethics

The "Trump" misinterpretation is a stark reminder of the potential pitfalls of relying on AI-driven technologies without careful consideration of ethical implications. This incident underscores the need for:

- Diverse and unbiased training data: AI algorithms must be trained on data that accurately reflects the diversity of human language and avoids perpetuating harmful stereotypes.

- Rigorous testing and auditing: Before release, AI systems should undergo thorough testing to identify and mitigate potential biases.

- Transparency and accountability: Companies developing AI systems should be transparent about their data sources and algorithms and held accountable for addressing biases.

- Ongoing monitoring and improvement: AI systems are not static; continuous monitoring and updates are crucial to prevent and address emerging biases.
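The testing and monitoring points above could take the form of an automated audit that runs before each release. The sketch below is a minimal example under stated assumptions: `audit_substitutions`, the watchlist, and the stand-in transcriber are all hypothetical, and a real audit would call an actual speech-to-text system on recorded audio.

```python
def audit_substitutions(samples, transcribe, watchlist):
    """Flag transcripts containing a watchlisted word that was never spoken.

    samples:    list of (audio_id, reference_text) pairs
    transcribe: callable mapping audio_id -> transcript (hypothetical
                stand-in for a real speech-to-text system)
    watchlist:  set of lowercase words that should only appear if spoken
    """
    findings = []
    for audio_id, reference in samples:
        ref_words = set(reference.lower().split())
        hyp_words = set(transcribe(audio_id).lower().split())
        # Words the system produced, the speaker did not say, and we care about.
        unexpected = (hyp_words - ref_words) & watchlist
        if unexpected:
            findings.append((audio_id, sorted(unexpected)))
    return findings

# Hypothetical data: a dictionary lookup plays the role of the transcriber.
fake_outputs = {
    "clip1": "the senator spoke today",
    "clip2": "the badword spoke today",
}
samples = [
    ("clip1", "the senator spoke today"),
    ("clip2", "the senator spoke today"),
]
findings = audit_substitutions(samples, fake_outputs.get, {"badword"})
print(findings)
```

Running a check like this over a diverse set of speakers and names, and repeating it after every model update, is one way to catch a harmful substitution before users do.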

The Future of AI and Bias Mitigation

The iPhone dictation error is a cautionary tale that highlights the ongoing challenges in developing ethical and unbiased AI. While Apple's swift response is commendable, the broader tech industry needs to adopt a more proactive and transparent approach to algorithmic bias to ensure that AI is applied fairly in our daily lives. This case is a notable reference point in the ongoing conversation about responsible AI development and deployment. We will continue to monitor Apple's efforts and the wider technological response to this issue.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on IPhone Dictation Error: "Trump" Misinterpreted As "Racist"—Apple's Fix. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Unleash The Beast Mini Pc Featuring Intel Core I9 Hk Cpu 24 Tb Ssd And 96 Gb Ram

Feb 28, 2025

Unleash The Beast Mini Pc Featuring Intel Core I9 Hk Cpu 24 Tb Ssd And 96 Gb Ram

Feb 28, 2025 -

Quiz De Personalidad Que Revela Tu Mes De Nacimiento

Feb 28, 2025

Quiz De Personalidad Que Revela Tu Mes De Nacimiento

Feb 28, 2025 -

The Power Of Vulnerability Doom Patrols Realistic Depiction Of Trauma

Feb 28, 2025

The Power Of Vulnerability Doom Patrols Realistic Depiction Of Trauma

Feb 28, 2025 -

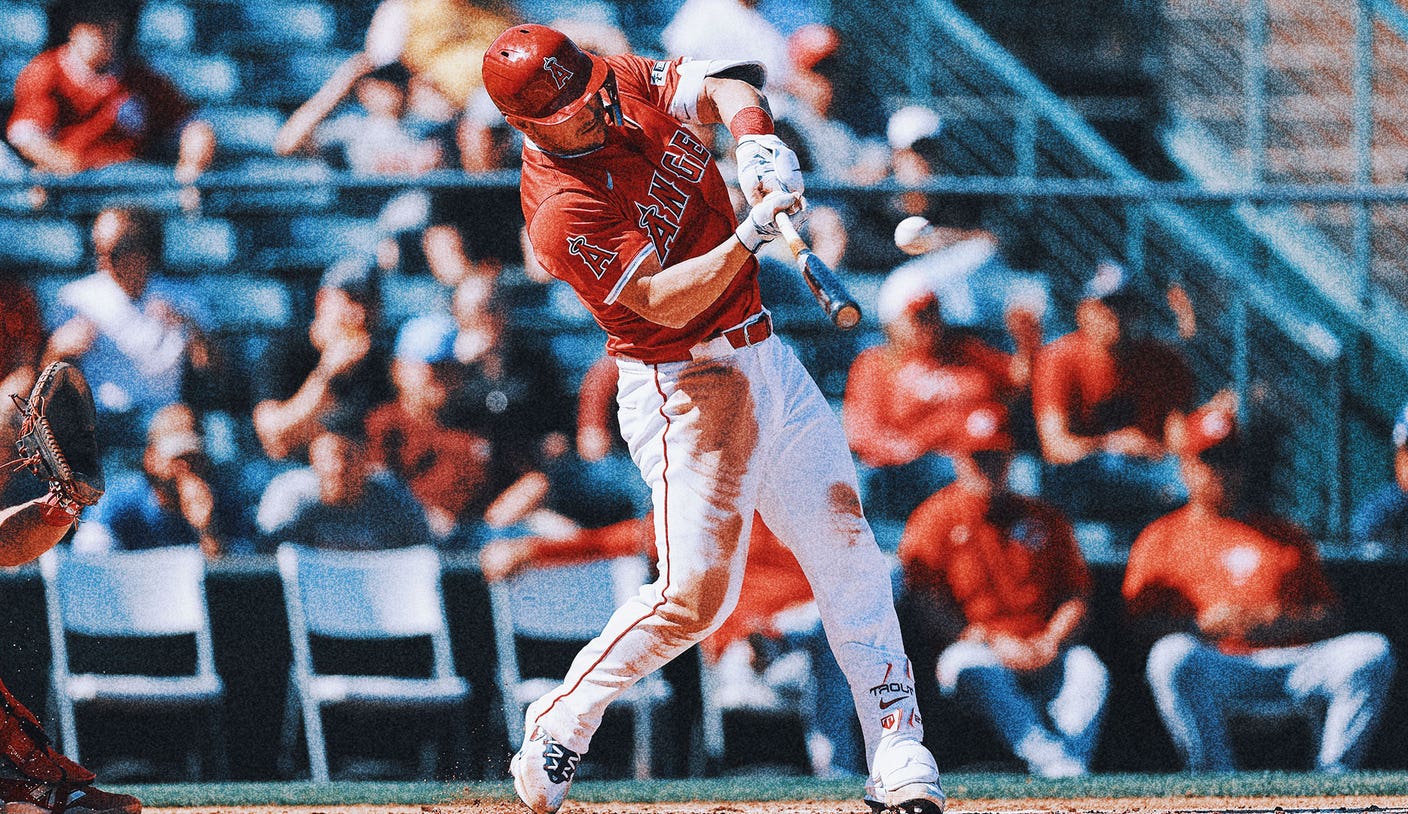

Mike Trouts First Spring Training Homer It Felt Good

Feb 28, 2025

Mike Trouts First Spring Training Homer It Felt Good

Feb 28, 2025 -

Miamis 10 Leading Sports Stars A Current Roster

Feb 28, 2025

Miamis 10 Leading Sports Stars A Current Roster

Feb 28, 2025