Llama 4's Multimodal AI: A Paradigm Shift In Artificial Intelligence

Meta's latest model, Llama 4, marks a notable change in the AI landscape. It is more than an incremental improvement: its multimodal capabilities change how people interact with and understand artificial intelligence. Instead of strictly text-based interaction, Llama 4 integrates text and images, and potentially even audio and video, opening the door to applications that were previously out of reach.

What Makes Llama 4 Multimodal AI Different?

Unlike text-only language models, Llama 4 isn't limited to processing a single type of data. This multimodal approach allows for a richer and more nuanced understanding of information. Imagine an AI that can not only read a text description of a scene but also analyze a corresponding image, identifying details that a purely text-based model would miss. This opens new avenues in a range of sectors.

- Enhanced Understanding of Context: By processing multiple modalities simultaneously, Llama 4 gains a deeper comprehension of context. This leads to more accurate and insightful results, especially in complex tasks requiring the integration of various data types.

- Improved Accuracy and Efficiency: Combining information from different modalities can improve both accuracy and efficiency. For example, an image can resolve an ambiguity in the accompanying text, leading to faster and more reliable processing.

- New Applications and Possibilities: The multimodal nature of Llama 4 unlocks a plethora of new applications across various fields, from medical diagnosis using image analysis and patient records to advanced robotics and more intuitive virtual assistants.
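The idea that an image can clarify ambiguous text can be illustrated with a toy "late fusion" sketch: two separate per-label probability estimates, one from text and one from an image, are multiplied and renormalized. This is only an illustration of the general principle, not a description of how Llama 4 combines modalities internally; the function name `fuse`, the labels, and all scores are hypothetical.

```python
def fuse(text_probs, image_probs):
    """Late fusion: multiply per-label probabilities, then renormalize."""
    # Only labels scored by both modalities can be combined.
    labels = text_probs.keys() & image_probs.keys()
    joint = {label: text_probs[label] * image_probs[label] for label in labels}
    total = sum(joint.values())
    if total == 0:
        raise ValueError("no shared label with non-zero probability")
    return {label: p / total for label, p in joint.items()}


# Hypothetical scores: the caption "a jaguar by the road" is ambiguous as text.
text_probs = {"animal": 0.5, "car": 0.5}
# Hypothetical image scores: the photo strongly suggests a vehicle.
image_probs = {"animal": 0.1, "car": 0.9}

fused = fuse(text_probs, image_probs)
best = max(fused, key=fused.get)
print(best, round(fused[best], 2))
```

Here the text alone cannot decide between the two readings, while the fused estimate favors "car" because the image evidence breaks the tie.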

Revolutionizing Industries with Multimodal AI

The implications of Llama 4's multimodal capabilities are far-reaching and transformative:

- Healthcare: Analyzing medical images (X-rays, CT scans) alongside patient records could significantly improve diagnostic accuracy and speed.

- Education: Interactive learning experiences combining text, images, and potentially even videos could create more engaging and effective educational tools.

- Automotive: Self-driving cars could benefit from a more comprehensive understanding of their surroundings by processing camera images alongside other sensor data and map information.

- Customer Service: AI-powered chatbots could provide more personalized and effective support by understanding not just the text of a customer's query but also accompanying images or videos.

Challenges and Considerations

While the potential benefits are immense, the development and deployment of multimodal AI models like Llama 4 also present certain challenges:

- Data Requirements: Training a robust multimodal AI model requires vast amounts of high-quality multimodal data, which can be expensive and difficult to acquire.

- Computational Resources: Processing multiple modalities simultaneously demands significant computational power, which can be a barrier to entry for smaller organizations.

- Ethical Concerns: As with all powerful AI technologies, ethical considerations related to bias, privacy, and potential misuse need careful attention.

The Future of Multimodal AI

Llama 4 represents a significant step forward in AI technology. Its multimodal capabilities could reshape numerous industries, creating new possibilities and addressing complex problems that text-only systems handle poorly. Challenges remain, but the direction of AI is increasingly multimodal, and Llama 4 helps set the stage for a new generation of intelligent systems. Further research and development will refine its capabilities and continue to push the boundaries of what is possible.

Featured Posts

-

Update Irs Reinstates Probationary Employees Mid April Work Resumption

Apr 07, 2025

Update Irs Reinstates Probationary Employees Mid April Work Resumption

Apr 07, 2025 -

Malta Imposes 1 2 Million Fine On Crypto Exchange Okx

Apr 07, 2025

Malta Imposes 1 2 Million Fine On Crypto Exchange Okx

Apr 07, 2025 -

Breaking News Late Mail Reveals Manly Sea Eagles Line Up For Round 5

Apr 07, 2025

Breaking News Late Mail Reveals Manly Sea Eagles Line Up For Round 5

Apr 07, 2025 -

Review The Minecraft Movie A Surprisingly Engaging Adaptation

Apr 07, 2025

Review The Minecraft Movie A Surprisingly Engaging Adaptation

Apr 07, 2025 -

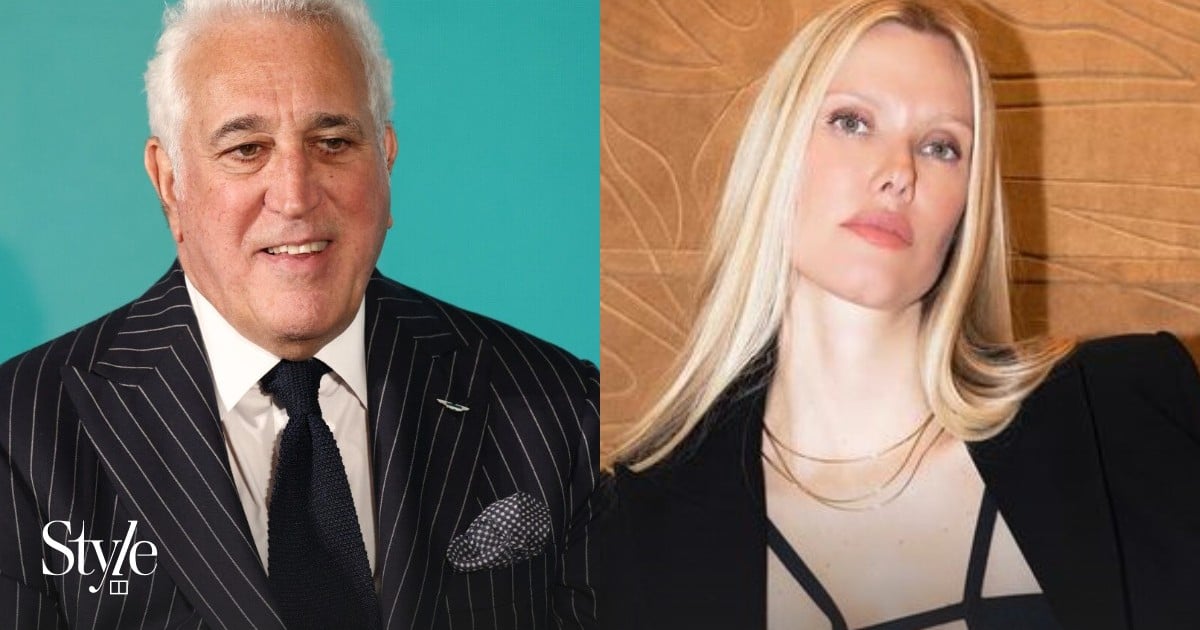

Meet Raquel Stroll Wife Of Aston Martins Lawrence Stroll And Designer Extraordinaire

Apr 07, 2025

Meet Raquel Stroll Wife Of Aston Martins Lawrence Stroll And Designer Extraordinaire

Apr 07, 2025