Open-Source LLM Race: Qwen 3 And Qwen 2.5 Coder Surpass DeepSeek And Meta


The race in the open-source large language model (LLM) arena is intensifying, with Alibaba's latest offerings, Qwen 3 and Qwen 2.5 Coder, emerging as strong contenders. Recent benchmark results suggest that these models outperform previously leading open-source options such as DeepSeek's models and Meta's Llama family, signaling a shift in the landscape of accessible AI technology. The development matters for researchers, developers, and businesses looking for capable, adaptable, and cost-effective AI tools.

Qwen 3: A Giant Leap in Open-Source Capabilities

Alibaba's Qwen 3 stands out for its strong performance across a range of tasks. Benchmarks covering reasoning and coding show a considerable improvement over earlier generations of open-source LLMs. The gains are attributed to several factors, including advances in model architecture and the extensive training data used. Specifically, Qwen 3 shows enhanced capabilities in:

- Complex Reasoning: Solving more intricate logical problems than its predecessors.

- Natural Language Understanding: Grasping nuanced language and context more reliably.

- Code Generation: Producing more accurate code across a variety of programming languages.

This performance positions Qwen 3 as a compelling alternative to proprietary models: it offers a powerful open-source option without the costs and usage restrictions that come with closed-source alternatives. Releasing Qwen 3 under an open-source license also encourages collaboration within the AI community, speeds up development, and broadens access to cutting-edge AI technology.

Qwen 2.5 Coder: A Specialized Powerhouse for Developers

While Qwen 3 excels at general-purpose tasks, Qwen 2.5 Coder is designed specifically for software development, with a focus on code generation, debugging, and understanding programming concepts. Its strengths include:

- Faster Code Completion: Offering developers quicker and more accurate code suggestions.

- Improved Code Debugging: Identifying errors and suggesting fixes more efficiently.

- Multi-Language Code Support: Handling code written in many programming languages.

Qwen 2.5 Coder is a significant boon for the developer community, offering a tool that can raise productivity across the software development lifecycle. Its specialized capabilities make it highly competitive with other open-source and commercial coding assistants.

The Implications of Alibaba's Advancements

The performance of Qwen 3 and Qwen 2.5 Coder relative to DeepSeek's and Meta's models underscores how quickly the open-source LLM landscape is evolving. Alibaba's releases raise the bar, pushing other developers and researchers to improve their own offerings. This competition benefits everyone: progress accelerates, and more advanced AI tools reach a wider audience.

The open-source nature of these models is particularly important. It promotes transparency, letting researchers scrutinize the models' inner workings and identify potential biases or vulnerabilities. That openness supports more responsible development and deployment of powerful AI technologies. The availability of Qwen 3 and Qwen 2.5 Coder is a meaningful step toward a more equitable and inclusive AI future. The race is far from over, but for now Alibaba has moved to the front of the open-source LLM competition.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Open-Source LLM Race: Qwen 3 And Qwen 2.5 Coder Surpass DeepSeek And Meta. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Beyond Bollywood Mukul Devs Life As A Trained Pilot

May 25, 2025

Beyond Bollywood Mukul Devs Life As A Trained Pilot

May 25, 2025 -

Concerning Update On Pete Dohertys Health From Fellow Libertine

May 25, 2025

Concerning Update On Pete Dohertys Health From Fellow Libertine

May 25, 2025 -

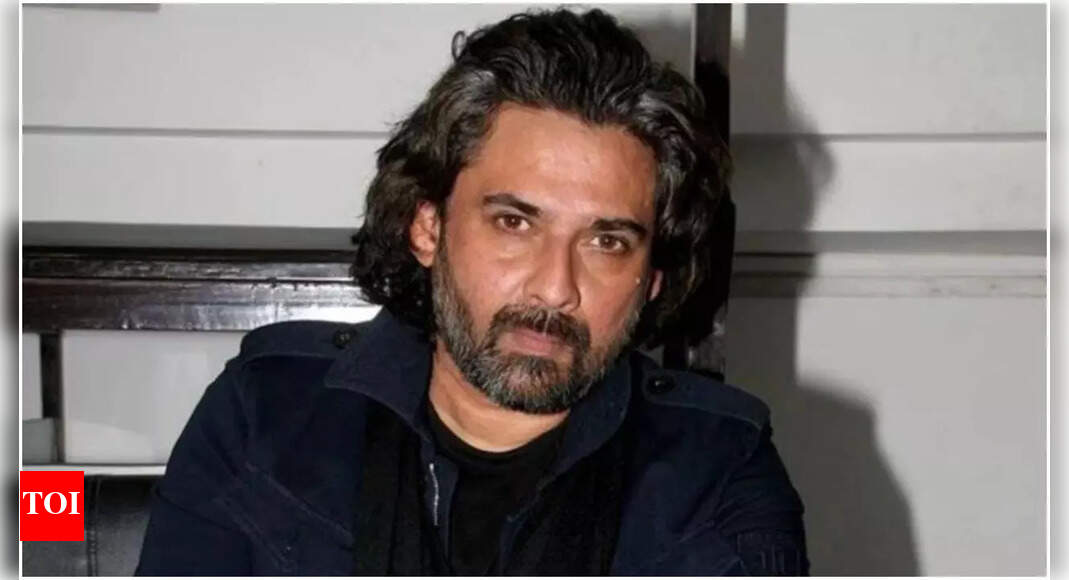

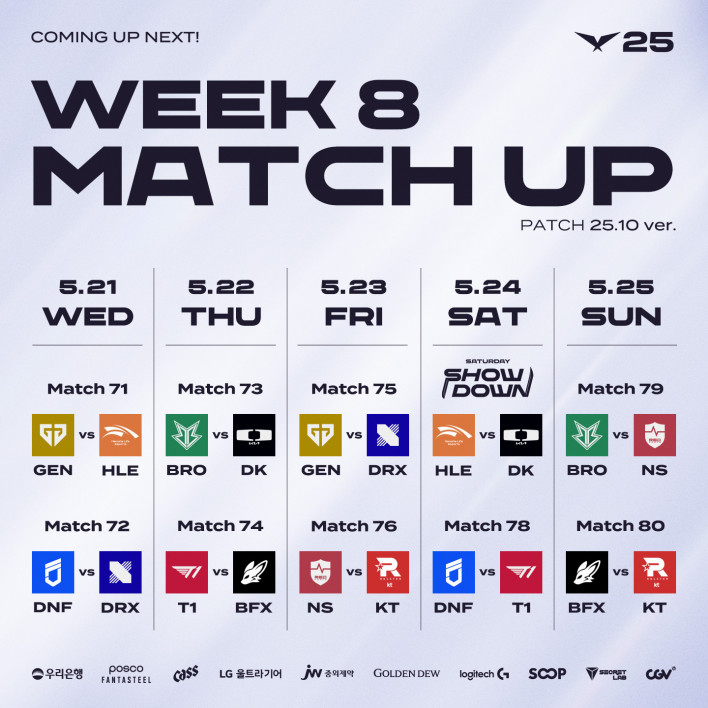

Hles Redemption Arc Facing Gen G And Dplus Kia In Lcks Patch 25 10 Meta

May 25, 2025

Hles Redemption Arc Facing Gen G And Dplus Kia In Lcks Patch 25 10 Meta

May 25, 2025 -

Mel Gibson Guns And A Trump Firing The Untold Story

May 25, 2025

Mel Gibson Guns And A Trump Firing The Untold Story

May 25, 2025 -

Dont Miss These 5 Modern Metal Bands At Slam Dunk This Year

May 25, 2025

Dont Miss These 5 Modern Metal Bands At Slam Dunk This Year

May 25, 2025

Latest Posts

-

Annabelle Doll Missing A New Orleans Mystery Deepens

May 25, 2025

Annabelle Doll Missing A New Orleans Mystery Deepens

May 25, 2025 -

Two Massive B C Lottery Wins In One Month 40 Million Jackpot And A Record Prize

May 25, 2025

Two Massive B C Lottery Wins In One Month 40 Million Jackpot And A Record Prize

May 25, 2025 -

Nyt Wordle May 24 2024 Game 1435 Solution And Helpful Hints

May 25, 2025

Nyt Wordle May 24 2024 Game 1435 Solution And Helpful Hints

May 25, 2025 -

Cricket Prodigy Ayush Mhatre Stuns With 28 Run Over Against Arshad Khan

May 25, 2025

Cricket Prodigy Ayush Mhatre Stuns With 28 Run Over Against Arshad Khan

May 25, 2025 -

Monaco Grand Prix 2025 Live Updates Results And Race Analysis

May 25, 2025

Monaco Grand Prix 2025 Live Updates Results And Race Analysis

May 25, 2025