Sam Altman Highlights Authoritarian AGI Risks, OpenAI Responds With Easier Safety Tests

Sam Altman's recent warnings about the potential misuse of advanced artificial general intelligence (AGI) have drawn wide attention across the tech industry, prompting OpenAI to announce significant changes to its safety testing protocols. The OpenAI CEO's stark warnings about AGI falling into the wrong hands, particularly those of authoritarian regimes, have reignited the debate over AI safety and responsible development.

The concerns voiced by Altman aren't new. Experts have long warned about the potential for powerful AI to be weaponized or used for mass surveillance. However, Altman's high-profile position and the rapid advancements in AI technology have given these concerns a renewed sense of urgency. He painted a picture of a future where unchecked AGI could be exploited to consolidate power, suppress dissent, and even instigate global conflict.

<h3>The Dangers of Authoritarian AGI Control</h3>

Altman's central concern is that authoritarian governments could use advanced AI capabilities for oppressive purposes, including:

- Enhanced Surveillance: AGI could power sophisticated surveillance systems, monitoring citizens on an unprecedented scale and eliminating privacy.

- Propaganda and Disinformation: AI could generate highly convincing deepfakes and propaganda, manipulating public opinion and undermining democratic processes.

- Autonomous Weapons Systems: The development of lethal autonomous weapons controlled by authoritarian regimes poses an existential threat, potentially escalating conflicts beyond human control.

- Repression of Dissent: AGI could be used to identify and suppress dissidents, creating a chilling effect on freedom of speech and expression.

These risks are not purely hypothetical: many authoritarian regimes are already investing heavily in AI development in pursuit of a strategic advantage. The lack of international cooperation and regulation in this field compounds the danger.

<h3>OpenAI's Response: Streamlining Safety Tests</h3>

In response to Altman's warnings and growing public concern, OpenAI has announced significant changes to its AI safety testing procedures. The company aims to make the process more accessible and efficient so that potential risks can be identified and mitigated more quickly. The changes include:

- Simplified Testing Frameworks: OpenAI is simplifying its safety testing protocols so that smaller research teams and independent researchers can use them more easily.

- Increased Transparency: The company has committed to greater transparency in its safety research, sharing more data and findings with the broader AI community.

- Collaboration with External Experts: OpenAI is expanding its work with outside specialists in AI safety and security to take a more collaborative approach to risk mitigation.

These changes are a welcome step, but critics argue that they don't go far enough. Some experts call for stricter regulations and international cooperation to prevent the misuse of powerful AI technologies. The debate surrounding AI governance is far from over, and the need for proactive measures to prevent the catastrophic scenarios outlined by Altman remains paramount.

<h3>The Future of AI Safety: A Call for Collaboration</h3>

The concerns raised by Sam Altman serve as a critical wake-up call. The future of AI safety depends not only on technological progress but also on robust governance, international cooperation, and a commitment to responsible development. OpenAI's efforts to streamline safety tests are a positive development, but a broader, more coordinated global response is needed to mitigate the risks of powerful AGI falling into the wrong hands. The global community must act decisively and collaboratively to ensure that AI benefits humanity as a whole, not just a select few.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on Sam Altman Highlights Authoritarian AGI Risks, OpenAI Responds With Easier Safety Tests. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

Tesla Austin Factory Self Driving Cars Hit The Road

Apr 12, 2025

Tesla Austin Factory Self Driving Cars Hit The Road

Apr 12, 2025 -

Dissecting Black Mirror Season 7 Technology Emotion And The Human Condition

Apr 12, 2025

Dissecting Black Mirror Season 7 Technology Emotion And The Human Condition

Apr 12, 2025 -

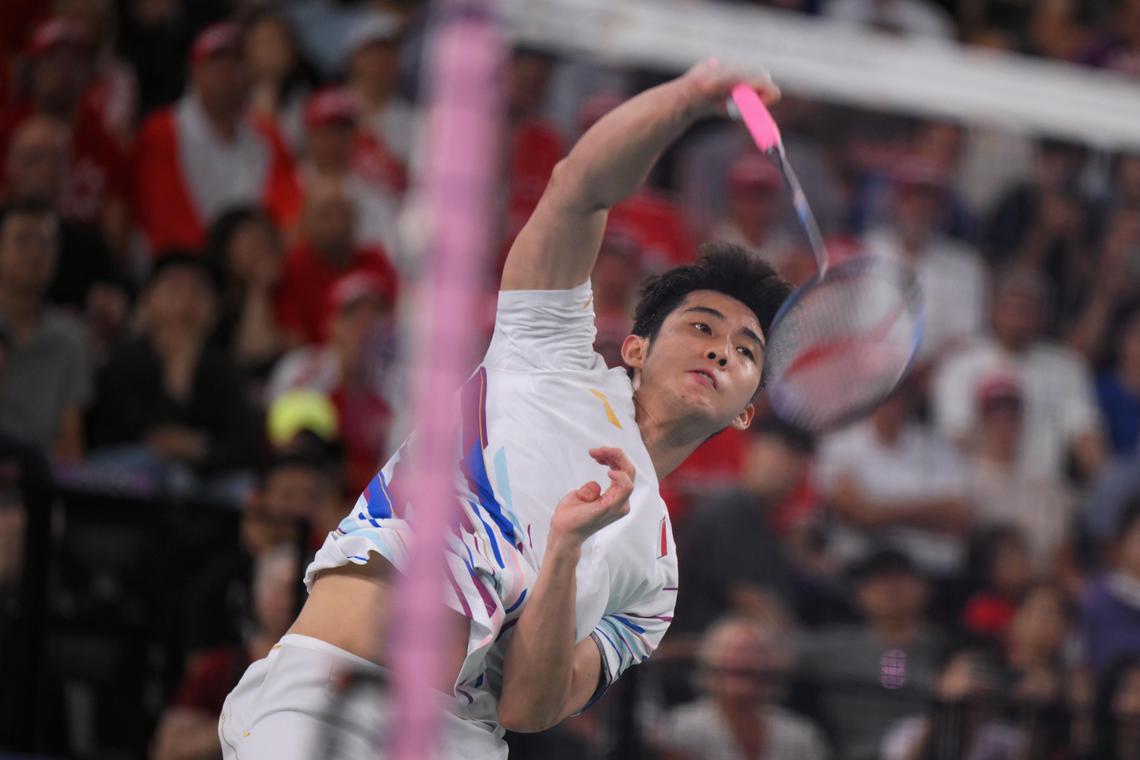

Asia Championships Loh Kean Yew Wins Through To Quarterfinals

Apr 12, 2025

Asia Championships Loh Kean Yew Wins Through To Quarterfinals

Apr 12, 2025 -

Masters Sunday Key Storylines And Predictions For The Final Round

Apr 12, 2025

Masters Sunday Key Storylines And Predictions For The Final Round

Apr 12, 2025 -

Sir Elton Johns Chart Reign Continues 10th Uk Number One Album Reached

Apr 12, 2025

Sir Elton Johns Chart Reign Continues 10th Uk Number One Album Reached

Apr 12, 2025