The Perils Of Open AI Models In Decentralized Applications

The Perils of Open AI Models in Decentralized Applications: A Growing Security Concern

The decentralized application (dApp) landscape is booming, promising transparency, security, and user control. However, the integration of OpenAI models, while offering exciting possibilities, introduces a new layer of complexity and significant security risks. This article explores the potential perils of incorporating these powerful AI models into the decentralized world.

The Allure of AI in Decentralized Finance (DeFi) and Beyond

OpenAI models offer the potential to revolutionize dApps. Imagine AI-powered oracles providing more accurate and timely data, automated trading bots optimizing investment strategies, or sophisticated fraud detection systems enhancing security. The possibilities seem limitless. However, this integration is not without its challenges.

Security Risks Posed by OpenAI Model Integration

AI models can be manipulated, whether they are open-source models or hosted models such as OpenAI's, and this poses a critical threat to the dApps that rely on them. Several key concerns emerge:

- Model Poisoning: Malicious actors could manipulate a model's training data, subtly influencing its outputs to gain an unfair advantage. This could lead to inaccurate predictions in DeFi applications, causing significant financial losses for users. Imagine a poisoned model consistently predicting a false market trend, leading to cascading liquidations.

- Data Breaches and Privacy Violations: Decentralized applications often handle sensitive user data. Integrating OpenAI models increases the attack surface, potentially exposing this data to breaches. The model itself could become a target, with hackers attempting to extract sensitive information or manipulate its behavior.

- Lack of Transparency and Verifiability: The "black box" nature of some AI models makes it difficult to understand their decision-making process, which in turn makes vulnerabilities harder to identify and mitigate. In a decentralized system where trust is paramount, this lack of verifiability is a major concern.

- Smart Contract Vulnerabilities: Integrating AI models often requires complex smart contracts. Any vulnerability in these contracts could be exploited, leading to the theft of funds or the manipulation of the dApp's functionality. The complexity introduced by AI increases the potential for unforeseen vulnerabilities.

- Dependence on Centralized Infrastructure: Although dApps aim for decentralization, many OpenAI models rely on centralized cloud infrastructure for training and deployment. This introduces a single point of failure and undermines the very principles of decentralization.
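The poisoning scenario above can be made concrete with a small guard. The following is a minimal Python sketch, not a production design: it assumes the dApp's off-chain component can read a few independent reference prices in addition to the model's output, and it refuses to act on a model output that strays too far from their median. The function name `accept_model_price`, the 5% threshold, and the sample prices are illustrative assumptions and do not come from any particular oracle or from the OpenAI API.

```python
from statistics import median
from typing import Sequence


def accept_model_price(model_price: float,
                       reference_prices: Sequence[float],
                       max_deviation: float = 0.05) -> bool:
    """Return True only if model_price lies within max_deviation
    (expressed as a fraction) of the median reference price."""
    if not reference_prices:
        # No independent data to compare against: fail closed.
        return False
    ref = median(reference_prices)
    if ref <= 0:
        # A non-positive reference price is treated as invalid input.
        return False
    return abs(model_price - ref) / ref <= max_deviation


# Hypothetical prices from three independent feeds.
references = [100.2, 99.8, 100.0]

print(accept_model_price(100.9, references))  # True: under 1% from the median
print(accept_model_price(82.0, references))   # False: 18% below the median
```

A check like this does not detect poisoning itself. It only limits how far a single manipulated output can move a downstream decision, such as triggering a liquidation.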

Mitigation Strategies and Best Practices

While the risks are real, they are not insurmountable. Several strategies can help mitigate the security concerns:

- Formal Verification: Rigorous mathematical verification of smart contracts is crucial to ensure their security and prevent exploits.

- Robust Auditing: Independent security audits of AI models and their integration into dApps are necessary to identify and address vulnerabilities before deployment.

- Decentralized Model Training: Exploring methods to train AI models in a decentralized manner can reduce reliance on centralized infrastructure and improve transparency.

- Data Privacy Enhancements: Employing advanced encryption and privacy-preserving techniques is essential to protect user data.

- Continuous Monitoring and Updates: Regular monitoring of the AI model's performance and prompt updates to address discovered vulnerabilities are critical.
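Auditing and updating a model only helps if the model that actually runs is the one that was reviewed. As a minimal sketch under stated assumptions, the Python below pins a model artifact to the SHA-256 digest recorded at audit time and checks the file before it is loaded. It applies to model files a team hosts itself; a model reached only through a hosted API cannot be checked this way. The function names and the idea of publishing the audited digest (for example, in a smart contract or release notes) are illustrative assumptions.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks so that
    large model files do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_audited_digest(path: str, audited_digest: str) -> bool:
    """Return True if the file's digest equals the digest recorded
    when the model was audited (comparison is case-insensitive)."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, audited_digest.lower())
```

A deployment script could call `matches_audited_digest` on the model file and refuse to start if it returns `False`, so that a silently swapped or corrupted artifact is caught before it serves any predictions.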

The Future of AI and Decentralization

The integration of OpenAI models in decentralized applications holds immense potential. However, addressing the inherent security risks is crucial for the responsible development and adoption of this technology. By prioritizing security, transparency, and decentralization, we can unlock the transformative power of AI while mitigating the potential perils. The future of dApps depends on navigating this complex landscape responsibly. Ignoring these security concerns risks undermining the trust and integrity of the entire decentralized ecosystem.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on The Perils Of Open AI Models In Decentralized Applications. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

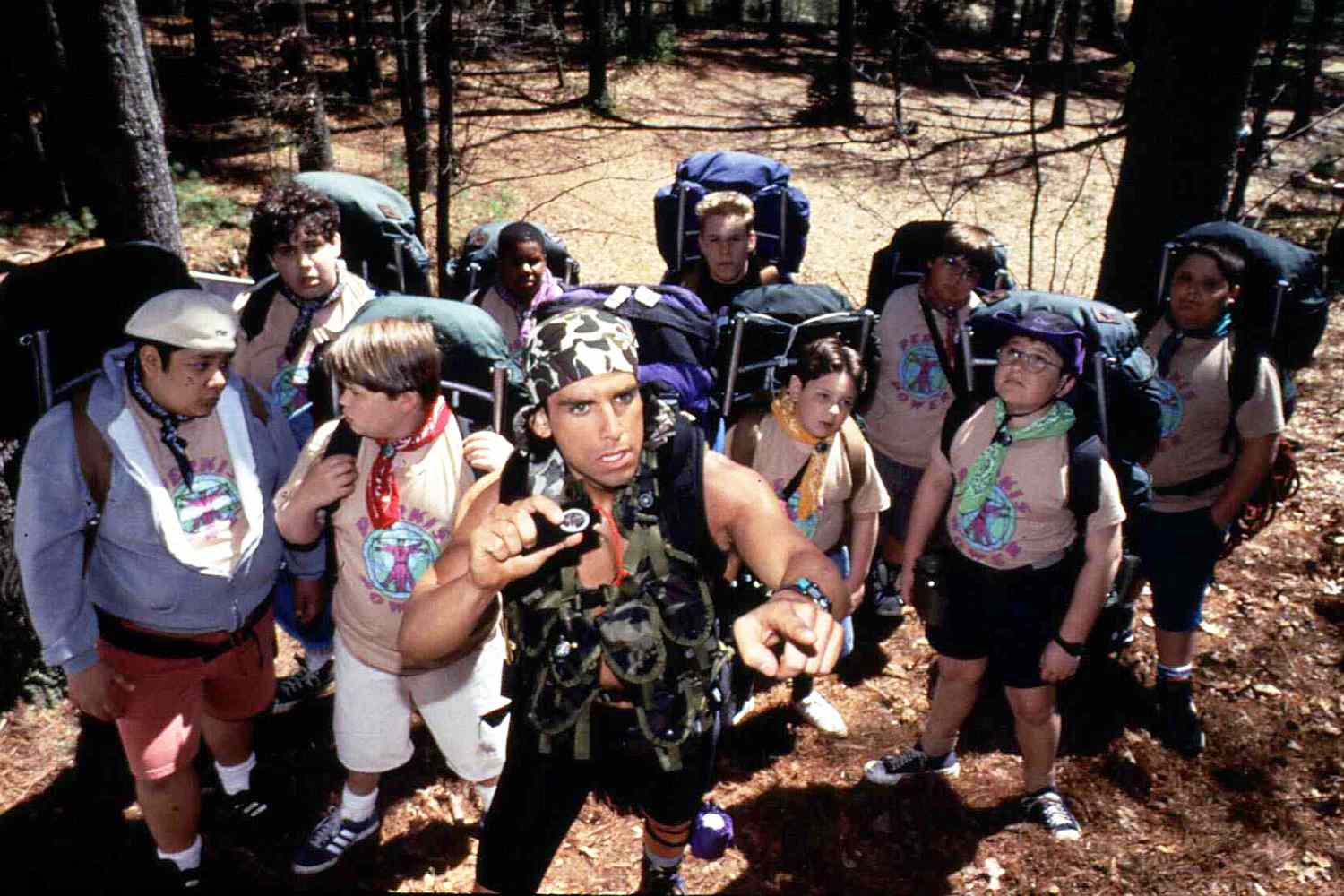

Shaun Weiss Reveals Ben Stillers On Set Behavior During Heavyweights Filming

May 04, 2025

Shaun Weiss Reveals Ben Stillers On Set Behavior During Heavyweights Filming

May 04, 2025 -

Heated Debate Ex Nba Star Ranks Kawhi Leonard Above A Boston Celtics Icon

May 04, 2025

Heated Debate Ex Nba Star Ranks Kawhi Leonard Above A Boston Celtics Icon

May 04, 2025 -

Containing Cale Makar Top Four Defensive Approaches

May 04, 2025

Containing Cale Makar Top Four Defensive Approaches

May 04, 2025 -

Met Gala 2024 How Black Dandyism Defined The Red Carpet

May 04, 2025

Met Gala 2024 How Black Dandyism Defined The Red Carpet

May 04, 2025 -

A Dancers Resilience Transforming Hardship Into Triumph

May 04, 2025

A Dancers Resilience Transforming Hardship Into Triumph

May 04, 2025