"Trump" To "Racist": Apple Fixes Dictation Software Glitch

Apple has quietly addressed a concerning bug in its dictation software that was reportedly transcribing the word "Trump" as "racist." The error, discovered and highlighted by several users on social media, sparked immediate outrage and raised questions about potential biases embedded within AI algorithms. This incident underscores the growing need for robust testing and ethical considerations in the development of voice recognition technology.

The glitch, which affected both iOS and macOS devices, did not appear for everyone: some users could reproduce it reliably, while others saw no problem at all. Even so, the volume of reports and the inflammatory nature of the error caused significant concern. Apple's swift action to correct it suggests the company recognized how serious the error was and how much it could affect public perception.

How Did the Glitch Occur?

The exact cause of the dictation error remains unclear. Experts speculate that the underlying model, trained on vast amounts of text and audio data, may have learned from a skewed dataset containing disproportionately strong associations between the word "Trump" and the term "racist." This points to a critical challenge in AI development: ensuring that training data is diverse, representative, and as free as possible from embedded biases. Because speech recognition systems rely on machine learning models, and increasingly on large language models (LLMs), biases present in the data can be amplified and reflected in the output.

Apple's Response and the Broader Implications

Apple hasn't publicly commented on the specifics of the fix, but users report that the issue was resolved after a software update. The quick response is commendable and shows a commitment to addressing user concerns and maintaining the integrity of its products.

However, this incident serves as a stark reminder of the potential pitfalls of relying on AI-powered technologies. The misinterpretation of "Trump" as "racist" raises serious questions about:

- Bias in AI algorithms: The incident underscores the need for rigorous auditing of training datasets to identify and mitigate bias.

- Transparency in AI development: More transparency is needed regarding the processes and data used in training these algorithms.

- Ethical implications of AI: The potential for AI to perpetuate and amplify societal biases demands careful ethical consideration.

This isn't the first time AI software has exhibited biased behavior. Similar incidents have been reported with facial recognition technology and other AI-powered systems, highlighting the need for a more comprehensive approach to ethical AI development. Moving forward, greater emphasis should be placed on:

- Diverse and representative datasets: Training data must accurately reflect the diversity of the real world.

- Regular audits and testing: Continuous monitoring and testing are crucial to identify and correct biases.

- Human oversight: Human intervention and oversight are essential to ensure responsible AI development.

The "Trump" to "racist" dictation glitch is a cautionary tale about bias in AI systems and the need for a more responsible, ethical approach to developing and deploying AI-powered technologies. Apple's quick fix is a positive step, but the broader implications of the incident demand continued attention and proactive measures across the tech industry.

Thank you for visiting our website, your trusted source for the latest updates and in-depth coverage on "Trump" To "Racist": Apple Fixes Dictation Software Glitch. We're committed to keeping you informed with timely and accurate information to meet your curiosity and needs.

If you have any questions, suggestions, or feedback, we'd love to hear from you. Your insights are valuable to us and help us improve to serve you better. Feel free to reach out through our contact page.

Don't forget to bookmark our website and check back regularly for the latest headlines and trending topics. See you next time, and thank you for being part of our growing community!

Featured Posts

-

The Rise Of Black Family Travel Exploring The Reasons Behind The Surge

Feb 28, 2025

The Rise Of Black Family Travel Exploring The Reasons Behind The Surge

Feb 28, 2025 -

Test De Txt Adivina Tu Bias Con Este Cuestionario

Feb 28, 2025

Test De Txt Adivina Tu Bias Con Este Cuestionario

Feb 28, 2025 -

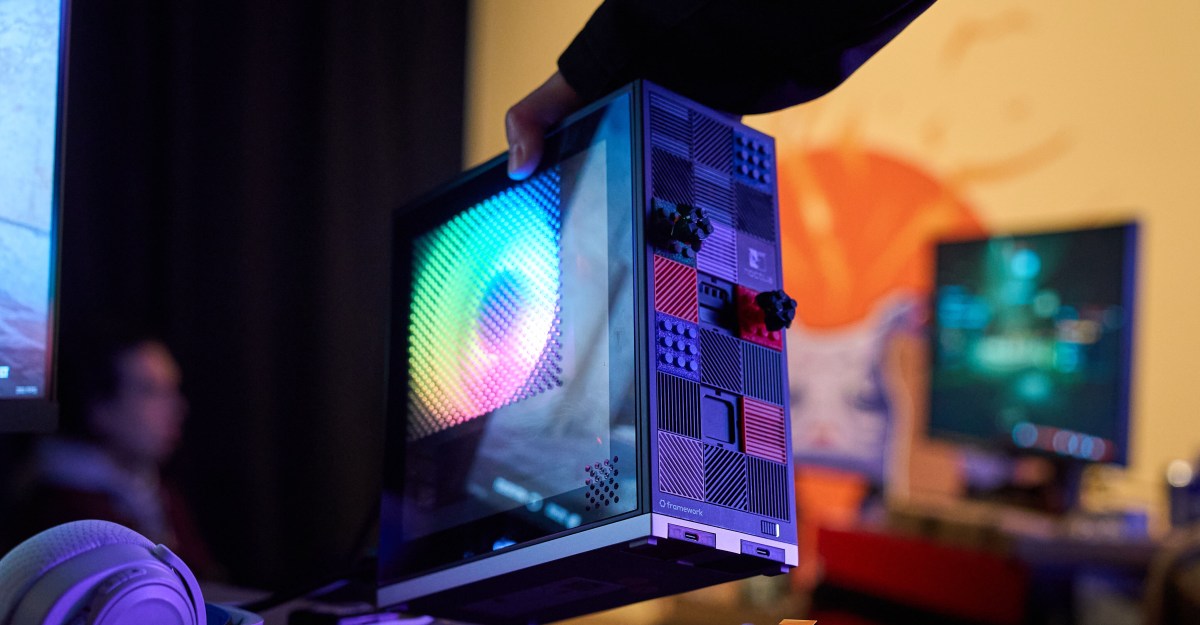

Framework Desktop Hands On Review A New Gaming Pc Paradigm

Feb 28, 2025

Framework Desktop Hands On Review A New Gaming Pc Paradigm

Feb 28, 2025 -

Controversy Erupts Nikes Caitlin Clark Deal Sparks Debate Among Fans

Feb 28, 2025

Controversy Erupts Nikes Caitlin Clark Deal Sparks Debate Among Fans

Feb 28, 2025 -

Is A Samsung Galaxy Z Flip 7 Coming Soon Latest Updates And Speculation

Feb 28, 2025

Is A Samsung Galaxy Z Flip 7 Coming Soon Latest Updates And Speculation

Feb 28, 2025